Understand CNAPPs with Our Guide

In the era of cloud computing, you have probably heard of both virtual machines (VMs) and containers. Both have a role to play, whether in the cloud or in more traditional data centers (including on-premises data centers). This article explains the differences between the two, compares and contrasts them, and reviews some use cases showing where one or the other is preferable.

Technical Difference in a Nutshell

Let’s get the technical differences out of the way first. For this, we need to understand what an Operating System (OS) kernel is. The kernel is responsible for the low-level work of the system, such as:

- Fairly allocating CPU time to processes

- Managing the RAM

- Granting access to hardware devices, such as hard disks (magnetic or SSD), keyboards, mice, displays, network interfaces, etc.

- Providing a layer of abstraction to access files on hard drives, which is commonly known as “file systems”

- Handling inter-process communications

- Implementing the various network layers of the network stack (IP, TCP, UDP, etc.)

- And many other aspects too long to detail here

That’s quite a lot, and indeed kernels (such as the Linux kernel) are quite large, representing millions of lines of code.
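To make the list above a little more concrete, here is a minimal Python sketch in which an ordinary process asks the kernel for two of those services: inter-process communication (a pipe) and file system access (a temporary file). The function names `pipe_roundtrip` and `file_roundtrip` are made up for this illustration; the underlying calls come from Python's standard `os` and `tempfile` modules.

```python
import os
import tempfile


def pipe_roundtrip(payload: bytes) -> bytes:
    """Send bytes through a kernel-managed pipe and read them back.

    Illustrative only: the payload is assumed to be small enough to fit
    in the pipe buffer, otherwise the write would block.
    """
    read_fd, write_fd = os.pipe()  # the kernel creates both ends of the pipe
    try:
        os.write(write_fd, payload)
    finally:
        os.close(write_fd)
    try:
        return os.read(read_fd, len(payload))
    finally:
        os.close(read_fd)


def file_roundtrip(text: str) -> str:
    """Write text to a temporary file and read it back via the file system."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "w") as handle:
            handle.write(text)
        with open(path) as handle:
            return handle.read()
    finally:
        os.remove(path)
```

In both functions the Python code never touches the hardware directly; every read and write is a request that the kernel carries out on the process's behalf.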

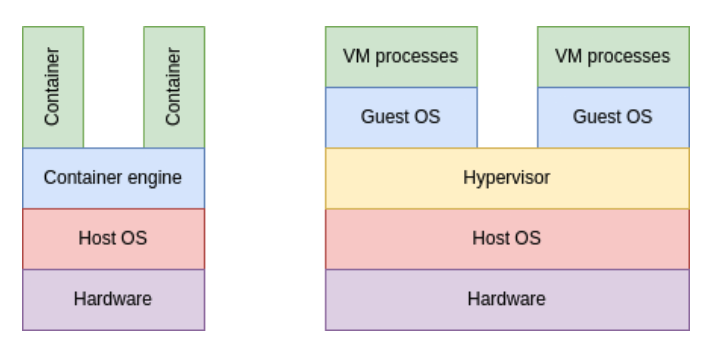

So, what is the main technical difference between a VM and a container? A VM runs its own kernel while a container uses the kernel of its host. A little diagram can visually show this:

Figure 1: Containers vs. VMs

Figure 1: Containers vs. VMsSome people call containers “lightweight VMs,” which is quite incorrect because they have far fewer processes (and very often just one process) running inside them. Running a full operating system inside a container is very difficult and oftentimes just impossible.

On the other hand, a VM will “believe” that it is an entire operating system on its own, running on some real hardware. It is quite unaware that this hardware is actually simulated through a piece of software running inside the host kernel, called the hypervisor.
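One simple way to observe this difference is to ask the running system which kernel it is using. The sketch below uses Python's standard `platform` module; the helper name `kernel_info` is an assumption made for this example.

```python
import platform


def kernel_info() -> dict:
    """Return basic facts about the kernel this process is running on."""
    uname = platform.uname()
    return {
        "system": uname.system,    # e.g. "Linux"
        "release": uname.release,  # kernel release string
        "machine": uname.machine,  # hardware architecture
    }


if __name__ == "__main__":
    print(kernel_info())
```

Run on a Linux host and then inside a container on that same host, this should report the same kernel release, because the container shares the host kernel. Run inside a VM, it reports the release of the guest kernel, which can differ from the host's.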

About VMs

You can see a VM as being an OS (the guest OS) running on top of another OS (the host OS), which itself runs on the actual hardware. In order to be able to run VMs, the host OS requires a hypervisor. This provides a layer of virtualization complete with some simulated hardware, so the guest OS will believe it is running on top of some real hardware. The guest OS otherwise behaves exactly like a standard OS.

Good to know: When you run an instance with a cloud vendor, the cloud vendor actually spins up a VM.

The advantages of using VMs are:

- The guest OS behaves exactly like a standard OS and has no knowledge that it is being run on a hypervisor rather than real hardware.

- A VM can be “saved” as a single file and simply moved or replicated somewhere else.

The disadvantages are:

- A VM is bulky (in terms of disk space, CPU, and RAM usage); this is because it is a full OS on its own.

- A VM requires a lot of maintenance, again because it is a full OS; note that you can avoid this problem by designing your infrastructure to be immutable.

- A VM is slightly slower than the host OS because of the hypervisor layer, although nowadays the overhead is minimal.

- A VM is, generally speaking, a bit slow to start because it contains many processes and services.

About Containers

Now let’s explore containers. A good way to understand containers is to see them as one or more processes running on the host kernel. But the host kernel keeps them in their own isolated environment, meaning these processes can’t see anything else running on this kernel. The processes inside a given container can see each other, but they can’t see processes running inside other containers or on the host itself.

For the host kernel to be able to run containers, it needs the necessary software technology to be able to segregate processes as described above. The Linux kernel provides this tech, which is called “namespaces.”
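On Linux, the namespaces a process belongs to are exposed as symbolic links under `/proc/<pid>/ns`. The following minimal sketch reads them with the standard `os` module; the helper names `list_namespaces` and `same_namespace` are invented for this illustration, and the code simply returns an empty result on systems without that directory.

```python
import os


def list_namespaces(pid="self") -> dict:
    """Map namespace type (e.g. "pid", "net", "mnt") to its identifier.

    Reads the Linux-specific /proc/<pid>/ns directory. Returns an empty
    dict when it is missing (non-Linux system) or not readable.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    if not os.path.isdir(ns_dir):
        return namespaces
    for name in sorted(os.listdir(ns_dir)):
        try:
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # entry not readable with our permissions
    return namespaces


def same_namespace(pid_a, pid_b, kind):
    """Return True/False if both processes share the given namespace type,
    or None when that cannot be determined."""
    ns_a = list_namespaces(pid_a).get(kind)
    ns_b = list_namespaces(pid_b).get(kind)
    if ns_a is None or ns_b is None:
        return None
    return ns_a == ns_b


if __name__ == "__main__":
    for kind, ident in list_namespaces().items():
        print(kind, ident)
```

Two processes in the same container report the same identifiers, while a process in another container typically reports different ones for the namespaces that isolate it, such as `pid`, `net`, and `mnt`.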

Now you can see that it is impossible to run a full OS inside a container because there is only one kernel running: the one from the host OS. Consequently, containers should not be seen as “lightweight VMs,” but more like closed boxes containing processes. Processes can run inside their box, but they can’t go outside of their boxes into other boxes.

Without going into detail, it’s useful to know that the host kernel can be tweaked so that processes in a given container can “see” and “touch” things that are outside their box. But this is a big security risk and should be avoided as much as possible.

So what are the advantages of using containers?

- Containers are “light,” in the sense that they run only the processes required for the given workload, meaning you save on CPU, RAM, and disk space compared to VMs.

- Containers usually start quickly, but this depends on the software they run; at any rate, they start faster than the same software running inside a VM.

- There are public registries that provide a vast number of container images for a lot of applications, such as Docker Hub.

- The attack surface is smaller compared to VMs.

There are, however, drawbacks to using containers:

- You can’t run a full OS inside a container.

- In theory, an exploit in one container can lead to exploits in other containers. However, this is very rare these days because the Linux namespace tech (which is used to run containers) is now very mature and secure.

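- You can’t save a running container, together with its state, into a single file and move it around as easily as a VM; what is portable is the image it was started from.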

- The root filesystem is ephemeral, i.e., everything you write in the root filesystem will be destroyed when you terminate the container.

Contrasting VMs and Containers

Now let’s move on to the practical differences between VMs and containers. Obviously, the first thing to note is that you can run containers inside VMs, but not the other way around.

VMs and containers serve different purposes. A VM runs a full OS and is designed to be used for the long term. On the other hand, a container runs a specific workload and is ephemeral and immutable in nature. A consequence of this is that if your containers require long-term storage (i.e., persistent storage that survives a container restart), they need to mount volumes, which must be declared and managed separately.
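The difference between the ephemeral root filesystem and a mounted volume can be illustrated with a toy model. This is not how a container runtime is implemented: `ToyContainer` and its methods are hypothetical names, and plain temporary directories stand in for the root filesystem and the volume.

```python
import os
import shutil
import tempfile


class ToyContainer:
    """Toy model of container storage (assumption: directories stand in
    for the root filesystem and for a separately managed volume)."""

    def __init__(self, volume_dir=None):
        self.root = tempfile.mkdtemp(prefix="toy-rootfs-")  # ephemeral
        self.volume_dir = volume_dir                         # persistent

    def write_root(self, name, text):
        path = os.path.join(self.root, name)
        with open(path, "w") as handle:
            handle.write(text)
        return path

    def write_volume(self, name, text):
        if self.volume_dir is None:
            raise RuntimeError("no volume mounted")
        path = os.path.join(self.volume_dir, name)
        with open(path, "w") as handle:
            handle.write(text)
        return path

    def terminate(self):
        # Everything written to the root filesystem is discarded.
        shutil.rmtree(self.root)


def demo():
    """Return (root file survives?, volume file survives?) after terminate."""
    volume = tempfile.mkdtemp(prefix="toy-volume-")
    try:
        container = ToyContainer(volume_dir=volume)
        root_file = container.write_root("cache.txt", "scratch data")
        volume_file = container.write_volume("db.txt", "important data")
        container.terminate()
        return os.path.exists(root_file), os.path.exists(volume_file)
    finally:
        shutil.rmtree(volume)
```

In this model, the file written to the root filesystem disappears when the container terminates, while the file written to the volume remains available to the next container that mounts it.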

Generally speaking, containers are more nimble to work with than VMs because they are designed to be focused on a single task. In fact, when your use case requires VMs, it would usually be hard to use containers instead. However, when your use case can use containers, you can typically choose between containers and VMs (although the architecture would be quite different between these two options).

You have most probably heard of container orchestration tools, like Kubernetes. If you use containers, you will probably need such a tool, which comes with its own learning curve and security considerations. If you use VMs from your cloud vendor, you will usually just need the management tools provided by that vendor, such as autoscaling groups and load balancers. If you use VMs in a traditional data center, you will probably need a VM-oriented orchestration tool like VMware vSphere, which again comes with its own learning curve (arguably even steeper than that of container orchestration tools).

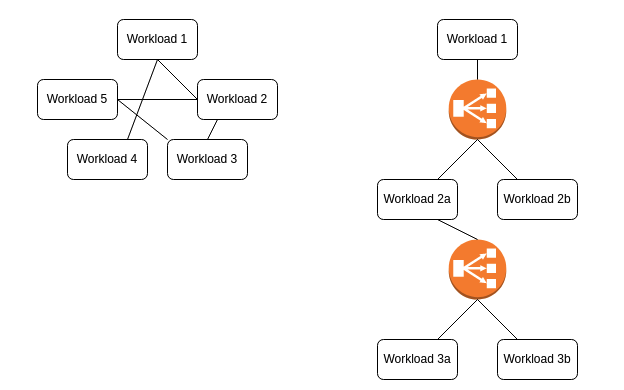

Finally, containers are more suited to set up a service mesh, an alternative architecture to using internal load balancers.

Figure 2: Service mesh vs. load balancers

Figure 2: Service mesh vs. load balancersWe can intuitively see from the diagrams above that the second architecture is more resource-intensive than the first. It is possible to create a service mesh, for example, by using HashiCorp Consul, but it is more cumbersome and difficult to configure than using, say, Istio, on a Kubernetes cluster.

Security Aspects

Although superficially similar, VMs and containers present very different security challenges. First of all, the software layers that enable both techs (hypervisors and kernel namespaces, respectively) are now very mature and very secure, so these shouldn’t be of any concern for either of them security-wise.

VMs essentially run an entire OS, so all the steps required to secure a whole system are required for a VM, such as:

- OS hardening

- Implementing antivirus software

- Regular and timely patching (can be avoided if using immutable infrastructure)

- Running processes as regular users rather than root

- Hardening the SSH server

- Configuring the firewall

- Reducing the attack surface (removing unneeded software)

- Using scanners to identify vulnerabilities when building the image

- Adopting tools to identify vulnerabilities at runtime

As we can see from this list, securing a VM is hard work. A lot of this is done when building the image that will be used to run the VM, but a significant amount of work is still done at runtime to detect vulnerabilities and a compromised system.

In contrast, securing a container is easier:

- Ensure you don’t run the container in privileged mode.

- Run the process(es) as a regular user, not root.

- Ensure filesystem permissions are as restrictive as possible.

- Use scanners to identify vulnerabilities when building the image.
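The checklist above lends itself to automation. Below is a minimal sketch of such a check in Python. The field names (`privileged`, `user`, `read_only_rootfs`, `image_scanned`) form a hypothetical schema invented for this example and do not match any particular runtime or orchestrator. A read-only root filesystem is used here as one possible reading of "restrictive filesystem permissions."

```python
def audit_container(spec: dict) -> list:
    """Check a container description against the four rules above.

    `spec` uses a hypothetical schema; adapt the keys to whatever your
    runtime or orchestrator actually exposes.
    """
    findings = []

    if spec.get("privileged", False):
        findings.append("container runs in privileged mode")

    # A missing user is treated as root here, as a conservative assumption.
    user = str(spec.get("user", "root"))
    if user in ("", "root", "0") or user.startswith(("root:", "0:")):
        findings.append("process runs as root")

    if not spec.get("read_only_rootfs", False):
        findings.append("root filesystem is writable")

    if not spec.get("image_scanned", False):
        findings.append("image was not scanned at build time")

    return findings


if __name__ == "__main__":
    risky = {"privileged": True, "user": "root"}
    for finding in audit_container(risky):
        print("WARNING:", finding)
```

A check like this could run in a build pipeline before an image is deployed. An empty list means none of the four rules were violated, not that the container is secure in every respect.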

There are still runtime security aspects related to running containers, but they are usually easier to handle and implement than for VMs.

In both cases, all the steps described for securing the host OS in the VM case above also apply to the host OS that will run the VMs/containers.

CrowdStrike can provide you with various solutions to protect your workloads from viruses and other malicious software, as well as network attacks.

The difference between VMs and containers is quite easy to understand at a technical level. The contrast between them becomes more apparent and interesting when looking at the use cases relevant to each.

Since Docker was created, the use of containers has steadily increased. They are nimble and enforce immutability. Plus, combined with a powerful orchestrator such as Kubernetes, they have significant advantages over VMs. This is why containers are slowly taking over, although some organizations will continue to use traditional VMs for a long time.