In part one of our Python Logging Guide Overview, we covered the basics of Python logging, which included the default logging module, log levels, and several Python logging best practices.

Now, in part two, we’ll build on those basics to cover more advanced Python logging topics:

- Logging to multiple destinations

- Python tracebacks and exceptions

- Structured versus unstructured log data

- Using python-json-logger to structure log data as JSON objects

If you’re new to Python logging, we recommend reading Part One and brushing up on general logging best practices before proceeding. If you’re already up to speed, let’s dive in!

Learn More

- Part 1: The Basics

- Part 2: Advanced Concepts

- Part 3: Centralized Python Logs

- Part 4: Logging with Django

Logging to Multiple Destinations

In part one, we demonstrated how you can log to single destinations such as:

- The console

- A file

- Systemd-journald

- Syslog

Any one of those may be sufficient for basic use cases. However, you may have a scenario in which you need to emit log messages to multiple destinations. Logging to the console and a file is a common example, so let’s start there.

In general, you target multiple logging destinations by attaching one log handler per destination. The handlers parameter of basicConfig (added in Python 3.3) provides a simple way to specify multiple handlers. In the script below, we use basicConfig to log the output to the console (stderr) and to a log file called PythonDemo.log.

```python
# Import the logging module
import logging

# Use basicConfig to send logs to a file and to the console (stderr)
logging.basicConfig(handlers=[logging.FileHandler("PythonDemo.log"), logging.StreamHandler()])

# Emit a WARNING message
logging.warning('You are learning Python logging!')
```

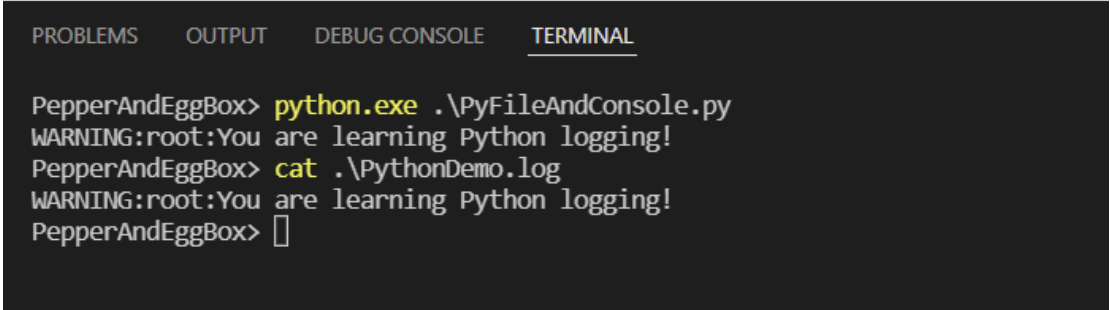

When you run the script, you should see this output in the console:

WARNING:root:You are learning Python logging!

At the same time, the log file you specified will contain the same text.
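The WARNING:root: prefix comes from the default format that basicConfig applies to every handler in the list that doesn't already have a formatter of its own. If you'd prefer a different layout, one option is to pass a format argument as well. This is a minimal sketch that assumes Python 3.8 or later, because it uses force=True (added in 3.8) to discard any handlers already attached to the root logger so the snippet can be re-run; the format string is just an example.

```python
import logging

# format= applies to every handler in the list that has no formatter of its own.
# force=True (Python 3.8+) removes handlers already attached to the root logger first.
logging.basicConfig(
    handlers=[
        logging.FileHandler("PythonDemo.log", mode="w"),  # mode="w" starts a fresh file each run
        logging.StreamHandler(),                          # writes to stderr
    ],
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
    level=logging.WARNING,  # the root logger's default level, made explicit
    force=True,
)

logging.warning('You are learning Python logging!')
```

Both the console and PythonDemo.log now show a timestamp, the level, and the logger name in front of the message.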

With this same approach, we can log to other destinations and handle more advanced use cases as well. For example, borrowing from the official Python Logging Cookbook, we can build a script that logs messages at DEBUG level and above to a file while logging only messages at WARNING level and above to the console. Here's the code:

```python
# Import the logging module
import logging

# Use basicConfig to send logs at DEBUG level and above to a file
logging.basicConfig(level=logging.DEBUG, filename='PythonDemo.log', filemode='w')

# Create a StreamHandler (console) and set it to WARNING level
console = logging.StreamHandler()
console.setLevel(logging.WARNING)

# Add the console handler to the root logger
logging.getLogger('').addHandler(console)

logging.debug('This is a debug message!')
logging.warning('Danger! This is a warning message')
```

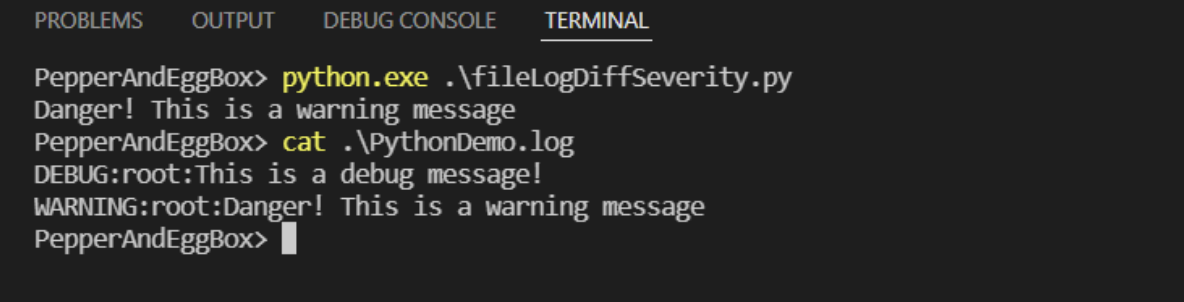

When you run that script, only the WARNING-level message appears in the console, because the console handler filters out anything below WARNING. The log file, however, contains both the DEBUG and WARNING messages.
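basicConfig only configures the root logger. In larger programs, you will often attach handlers to a named logger instead. The sketch below reproduces the same split (DEBUG and above to a file, WARNING and above to the console) on a logger named "demo" and gives each handler its own format. The logger name, the file name PythonDemoLevels.log, and the format strings are illustrative choices, not part of the cookbook recipe.

```python
import logging

# A named logger instead of the root logger (the name "demo" is arbitrary)
demo_logger = logging.getLogger("demo")
demo_logger.setLevel(logging.DEBUG)  # let every record through to the handlers
demo_logger.propagate = False        # don't also pass records to the root logger's handlers

# File handler: records at DEBUG and above, with a detailed format
file_handler = logging.FileHandler("PythonDemoLevels.log", mode="w")
file_handler.setLevel(logging.DEBUG)
file_handler.setFormatter(logging.Formatter("%(asctime)s %(levelname)s %(name)s: %(message)s"))

# Console handler (stderr): records at WARNING and above, with a shorter format
console_handler = logging.StreamHandler()
console_handler.setLevel(logging.WARNING)
console_handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))

demo_logger.addHandler(file_handler)
demo_logger.addHandler(console_handler)

demo_logger.debug('This is a debug message!')
demo_logger.warning('Danger! This is a warning message')
```

Each handler filters by its own level, while the logger's level decides which records reach the handlers at all. Setting propagate to False keeps these records from also being emitted by any handlers on the root logger, such as those added by basicConfig.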

Understanding Exceptions and Tracebacks in Python

Often, you log messages so you can debug unexpected failures. Exceptions and tracebacks are two of the most important concepts to understand when debugging in Python.

What is a Python exception?

A Python exception is an error that occurs during the execution of a program and disrupts the program's normal flow. If the program does not handle the exception (for example, with a try...except statement), Python prints a traceback and the program exits.
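As a small illustration, the hypothetical divide function below handles the TypeError itself, so the program keeps running instead of exiting:

```python
def divide(a, b):
    """Return a / b, or None if the operands can't be divided (hypothetical helper)."""
    try:
        return a / b
    except TypeError as exc:
        # The exception is handled here, so it does not stop the program
        print(f"Could not divide: {exc}")
        return None


result = divide(1, "egg")
print("Still running, result is", result)
```

Without the try...except, the same call would end the program with a traceback.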

What is a Python traceback?

A Python traceback is a report of the function calls that were active at a specific point in a program, typically the point where an exception was raised. It is similar to traditional stack traces (sometimes called backtraces), except that the most recent function call appears at the bottom of the trace. That ordering is what the familiar Traceback (most recent call last): header refers to when a Python program “errors out.”

To understand how tracebacks work, let’s create one. The script below attempts to divide the integer 1 by the string egg:

```python
# Do some bad math
1 / "egg"
```

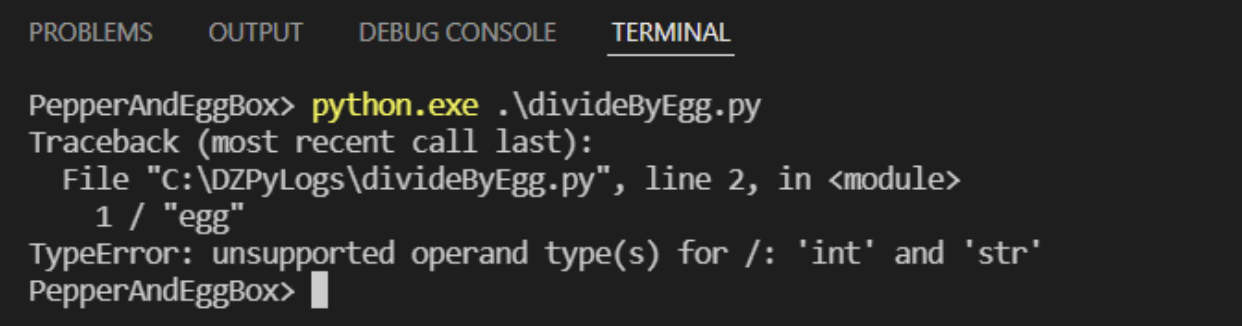

When you run this script, Python prints a traceback to the console. Here’s a breakdown of how to read that output:

- `Traceback (most recent call last):` This line tells us that the traceback of the error is beginning.

- `File "c:\Path\to\your\script\divideByEgg.py", line 2, in <module>` followed by `1 / "egg"`: This points us to the specific line where the exception occurred.

- `TypeError: unsupported operand type(s) for /: 'int' and 'str'`: This tells us the exception is a TypeError, which means there was a problem with our data types. In this case, we can’t divide an integer ('int') by a string ('str').
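The example above has only one frame because the error happens at the top level of the script. To see the most-recent-call-last ordering with several frames, here is a sketch that raises the same error two function calls deep and uses traceback.format_exc() from the standard library to capture the report as a string. The function names outer and inner are arbitrary.

```python
import traceback


def outer():
    # outer() is called first, so its frame appears earlier in the traceback
    return inner()


def inner():
    # inner() is the most recent call, so its frame appears last
    return 1 / "egg"


try:
    outer()
except TypeError:
    # format_exc() returns the same report Python prints for an unhandled exception
    report = traceback.format_exc()

print(report)
```

Reading the printed report from top to bottom, you move from the module level into outer and then inner, and the exception type and message come last.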

How to Log Python Tracebacks

Given that tracebacks provide granular detail on why a program failed, logging them is often useful. The default Python logging module includes a logging.exception function that logs a custom error message followed by the traceback of the exception currently being handled. It always logs at the ERROR level and should only be called from inside an exception handler.

To catch and log our example traceback, we can wrap the bad math in a try...except statement. Below is a modified version of our previous script that logs the exception to the console and to a log file called BadMathDemo.log.

```python
# Import the default logging module
import logging

# Use basicConfig to send logs to a file and the console
logging.basicConfig(handlers=[logging.FileHandler("BadMathDemo.log"), logging.StreamHandler()])

# Do some bad math
try:
    1 / "egg"
except TypeError:
    # Log our message at ERROR level, followed by the traceback
    logging.exception('Your math was bad')
```

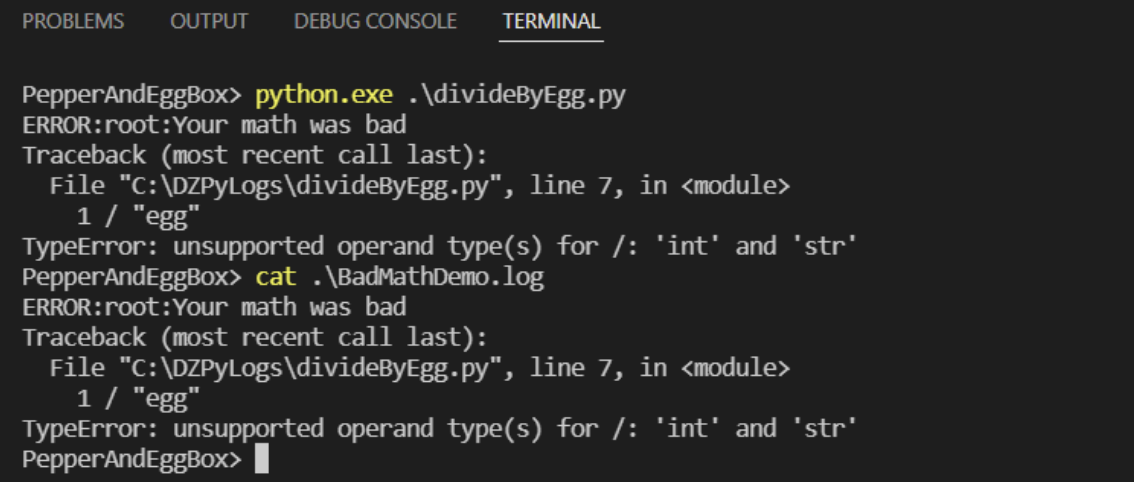

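Now when we run the script, we’ll see our custom error message in the console, followed by the traceback. Because basicConfig applies its default format to both handlers, the console output and the contents of BadMathDemo.log should both start with ERROR:root:Your math was bad and then show the full traceback.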
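Because logging.exception always uses the ERROR level, you need a different call if you want a traceback at another severity. Any logging method accepts exc_info=True, which attaches the traceback of the exception being handled to the record. In this sketch, output goes to an in-memory io.StringIO stream so it can be inspected, and the logger name badmath is arbitrary.

```python
import io
import logging

# Send output to an in-memory stream instead of the console so it can be inspected
error_stream = io.StringIO()
stream_handler = logging.StreamHandler(error_stream)
stream_handler.setFormatter(logging.Formatter("%(levelname)s:%(name)s:%(message)s"))

badmath_logger = logging.getLogger("badmath")
badmath_logger.setLevel(logging.DEBUG)  # don't depend on the root logger's level
badmath_logger.propagate = False        # keep these records away from root handlers
badmath_logger.addHandler(stream_handler)

try:
    1 / "egg"
except TypeError:
    # Same effect as badmath_logger.exception(...): ERROR level plus the traceback
    badmath_logger.error("Your math was bad", exc_info=True)
    # The same traceback, logged at CRITICAL instead
    badmath_logger.critical("Your math was really bad", exc_info=True)

print(error_stream.getvalue())
```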

Unstructured versus structured data in Python logs

While humans will often read log messages, in many cases other programs need to ingest logs. When we send logs to other programs, the structure—or lack of structure—in the formatting can make a world of difference.

What is unstructured data?

Unstructured data is data that does not follow a specific pattern or model. Strings that are human-readable sentences, like “Oops! An error occurred. Please check your math and try again!”, are examples of unstructured data. People have no problem parsing the meaning, but things get complex when a computer needs to parse them.

What is structured data?

Structured data is data formatted using objects that follow a specific pattern. JSON, XML, and YAML are common examples of structured data formats. By using data objects (as opposed to strings) and following a specific pattern, structured data makes it much easier for computers to read data and to automate and scale log parsing. As of Python 3.2, Python’s default logging module supports structured data.
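To make the idea concrete, here is a minimal sketch that emits structured log records using only the standard library's json module and a custom logging.Formatter subclass. The class name JsonLineFormatter, the logger name, and the field names are our own choices for illustration; this is not how python-json-logger is implemented.

```python
import io
import json
import logging


class JsonLineFormatter(logging.Formatter):
    """Hypothetical formatter: renders each log record as one JSON object per line."""

    def format(self, record):
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            # Store the formatted traceback as a string field
            entry["exc_info"] = self.formatException(record.exc_info)
        return json.dumps(entry)


json_stream = io.StringIO()
json_handler = logging.StreamHandler(json_stream)
json_handler.setFormatter(JsonLineFormatter())

json_logger = logging.getLogger("structured-demo")
json_logger.setLevel(logging.INFO)
json_logger.propagate = False
json_logger.addHandler(json_handler)

json_logger.info("User %s logged in", "alice")

# A program can parse each line back into a dict without any custom string parsing
parsed = json.loads(json_stream.getvalue().splitlines()[0])
print(parsed["level"], parsed["message"])  # INFO User alice logged in
```

Because every record has the same keys, a log pipeline can filter on level or logger directly instead of relying on regular expressions.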

Learn More

Read Structured, Unstructured and Semi Structured Logging Explained for a deeper look into structured versus unstructured log data.


Using python-json-logger

python-json-logger is a popular library that makes it easy to emit Python logs as JSON objects. You can install it using pip with this command:

pip install python-json-logger

To use it, we import jsonlogger and set a jsonlogger.JsonFormatter() instance as the formatter on a handler. For example, to make our earlier example use JSON formatting and write to a log file called BadMathDemo.json, we can use this script:

```python
# Import the default logging module
import logging

# Import the JSON formatter from python-json-logger
from pythonjsonlogger import jsonlogger

# Get the root logger
logger = logging.getLogger()

# Point a FileHandler to the output file
logHandlerJson = logging.FileHandler("BadMathDemo.json")

# Set the formatter
formatter = jsonlogger.JsonFormatter()
logHandlerJson.setFormatter(formatter)

# Add the handler
logger.addHandler(logHandlerJson)

# Wrap our bad math in a try...except statement
try:
    1 / "egg"
except TypeError:
    # Log at ERROR level with the traceback, through the logger we configured
    logger.exception('Your math was bad')
```

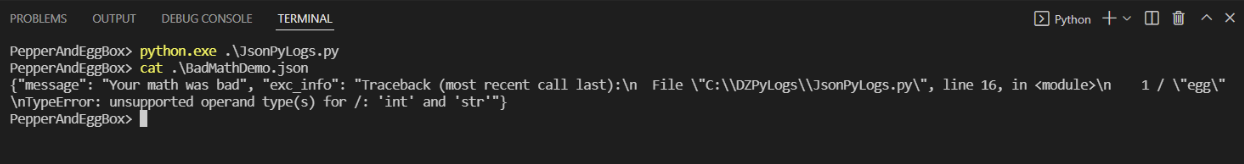

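When we run the script, BadMathDemo.json should contain the log record as a stringified JSON object on a single line, with the message and the traceback stored as string fields.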

As with other formatters, you can also set up JsonFormatter in a configuration file loaded with the logging.config.fileConfig function.

Log Everything, Answer Anything – For Free

Falcon LogScale Community Edition (previously Humio) offers a free modern log management platform for the cloud. Leverage streaming data ingestion to achieve instant visibility across distributed systems and prevent and resolve incidents.

Falcon LogScale Community Edition, available instantly at no cost, includes the following:

- Ingest up to 16GB per day

- 7-day retention

- No credit card required

- Ongoing access with no trial period

- Index-free logging, real-time alerts and live dashboards

- Access our marketplace and packages, including guides to build new packages

- Learn and collaborate with an active community