At CrowdStrike, we are relentlessly researching and developing new technologies to outpace new and sophisticated threats, track adversaries’ behavior and stop breaches. As today’s adversaries continue to become faster and more advanced, the speed of enterprise detection and response is paramount.

Speed is also a challenge for today’s organizations, which face mounting attack volumes amid a global shortage of cybersecurity practitioners. In today’s evolving threat landscape, the intersection of AI and hardware innovation plays a pivotal role in shaping the future of threat detection and response. For CrowdStrike, a global cybersecurity leader, AI has been fundamental to our approach from the beginning.

Technology collaboration and research are essential to deploy new methods of analysis and defense. When Intel contacted us about the neural processing unit (NPU) in the new Intel® Core™ Ultra processors, we were excited to collaborate and explore new possibilities for enabling AI-based security applications on endpoint PCs, such as Dell’s latest generation of Latitude, the industry’s most secure commercial PC.¹

The Challenges of Endpoint AI

The CrowdStrike Falcon® platform is engineered to operate in a transparent and near-zero impact manner, allowing seamless deployment to the endpoint.

Across the cybersecurity industry, the deployment of AI and machine learning (ML) models to the endpoint to perform advanced analytics has been limited — despite the advantages that such a configuration would offer. The reality is that most AI models, particularly neural network models for deep machine learning, simply won’t fit inside the performance envelope of an endpoint product. As a result of this technological challenge, many potential endpoint use cases of deep learning have been considered unworkable.

With the addition of the NPU in the Intel Core Ultra processor, we collaborated with Intel to test the feasibility and system impacts of moving large models from the cloud to the endpoint.

Case Study: Detecting Scripts and Fileless Malware Using an NPU-Enabled Convolutional Neural Network Model

Intel Core Ultra processors include an NPU, a purpose-built AI accelerator ideal for offloading inference of AI workloads, including convolutional neural network (CNN) models. CNN models are instrumental in detecting malicious scripts, which are frequently used in fileless malware — an increasingly common technique used in 75% of cyberattacks that gained initial access in 2023.

Today, effective CNN models are not practical to deploy on the endpoint due to CPU overhead. Realistically, when used for script analysis, a large CNN model is currently only able to operate in the cloud to avoid disrupting the user experience. This means that applying this model to an endpoint requires the scripts to be uploaded to the cloud for analysis — a severe limitation in its application to endpoint detection.

To assess Intel’s NPU capabilities, we collaborated on a stress test using a large, non-optimized experimental CNN model developed to detect malicious scripts. The aim was to evaluate whether the NPU could effectively minimize CPU overhead in an extreme scenario, providing insights into the viability of deploying smaller production-ready endpoint models.

The Intel team helped test this experimental model directly on an Intel Core Ultra processor in two configurations: CPU only and CPU+NPU. When running in continuous mode, the model used approximately 20% of systemwide CPU capacity in CPU-only mode.² With the NPU, CPU usage dropped to less than 1%. This breakthrough highlights the potential for more practical and efficient endpoint AI deployment.

| Inference Device | Max CPU Utilization | Memory Utilization | Compiled Model Size | Average Inference Time (Excluding First Inference) |
|---|---|---|---|---|
| CPU only | 20% | 1.07 GB | 0.9 GB | ~86 ms |
| CPU+NPU | <1% | 1.4 GB | 0.5 GB | ~23 ms |
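The average inference times above exclude the first inference, which can absorb one-off startup costs. The following is a minimal Python sketch of that kind of measurement, offered as an illustration under stated assumptions rather than the harness Intel used: `infer` is a hypothetical stand-in for a model call (the test itself used the OpenVINO framework), and the `clock` parameter exists only so the timer can be swapped out.

```python
import statistics
import time
from typing import Callable, Sequence


def average_latency_after_first(
    infer: Callable[[object], object],
    inputs: Sequence[object],
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Mean per-call latency in milliseconds, excluding the first call.

    `infer` is a hypothetical stand-in for a compiled model's inference call.
    """
    if len(inputs) < 2:
        raise ValueError("need at least two inputs to exclude the first call")
    latencies_ms = []
    for sample in inputs:
        start = clock()
        infer(sample)
        latencies_ms.append((clock() - start) * 1000.0)
    # Drop the first (warm-up) call, which can include one-off setup costs.
    return statistics.mean(latencies_ms[1:])


# Usage with a trivial placeholder workload (not a neural network).
print(average_latency_after_first(lambda x: sum(x), [list(range(1000))] * 20))
```

Running the same function once per device configuration, with everything else held constant, is what makes the two rows comparable.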

The Benefits of Endpoint AI

The substantial reduction in system impact observed with the utilization of an Intel Core Ultra processor featuring NPU acceleration makes the deployment of a comparable, and likely more compact, model to the endpoint viable. This advancement allows for detections to occur directly on the endpoint. Script analysis can be performed for an initial pass to detect many of the potentially malicious scripts prior to their execution, with cloud-based analysis subsequently doing the heavy lifting for deeper analysis.
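The two-tier flow described above, an endpoint first pass before execution followed by deeper cloud analysis, can be sketched as a simple triage policy. This is a minimal illustration, not CrowdStrike’s implementation: `score_fn` stands in for the endpoint-hosted CNN, and the three verdicts, the 0.9/0.5 thresholds and the sample scores are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Verdict(Enum):
    ALLOW = "allow"    # low score: let the script run
    CLOUD = "cloud"    # uncertain: send for deeper analysis in the cloud
    DETECT = "detect"  # high score: raise a detection on the endpoint


@dataclass(frozen=True)
class TriagePolicy:
    # Hypothetical thresholds on a maliciousness score in [0, 1].
    detect_at: float = 0.9
    escalate_at: float = 0.5

    def decide(self, score: float) -> Verdict:
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score must be in [0, 1], got {score}")
        if score >= self.detect_at:
            return Verdict.DETECT
        if score >= self.escalate_at:
            return Verdict.CLOUD
        return Verdict.ALLOW


def triage_script(
    script: str, score_fn: Callable[[str], float], policy: TriagePolicy
) -> Verdict:
    """Initial on-endpoint pass, run before the script executes.

    `score_fn` is a hypothetical stand-in for the endpoint-hosted model.
    """
    return policy.decide(score_fn(script))


# Usage with a placeholder scorer (a fixed lookup, not a real detector).
toy_scores = {"Get-Date": 0.02, "suspicious.ps1": 0.7, "dropper.ps1": 0.97}
policy = TriagePolicy()
for sample in ["Get-Date", "suspicious.ps1", "dropper.ps1"]:
    print(sample, triage_script(sample, toy_scores.__getitem__, policy).value)
```

In this sketch only the middle band is escalated, so cloud resources go to the cases the endpoint model cannot settle on its own.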

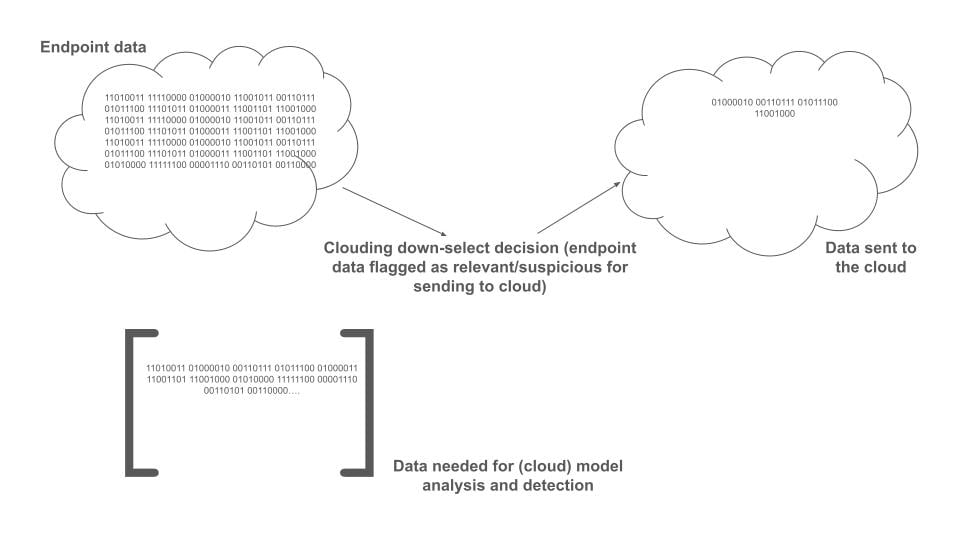

With NPU acceleration, there is also a considerable advantage in being able to use AI to filter large quantities of endpoint data before uploading it to the cloud. This significantly reduces the volume of data that is sent.

Finally, the economic reality of running a large-scale, cloud-hosted AI service underscores the importance of optimizing resource allocation by enabling the cloud models to focus exclusively on wider-view and deeper analysis, enhancing efficiency and efficacy.

The diagram above illustrates that, for deep learning models in the cloud to make successful detections, a substantial input data set with various parameters must be uploaded from the endpoint. Hosting the CNN model on the endpoint, where it has access to the complete set of data, allows the efficacy of the cloud-hosted model to be augmented by dynamic decisions about which data to send to the cloud.

Once input data has been identified as suspicious on the endpoint, it can be more deeply analyzed in the cloud where more resources are available. With advanced AI model deployment to the endpoint now possible using the NPU on Intel Core Ultra processors, the decision of what to send to the cloud can be governed dynamically using the deployed model. This is an extremely powerful capability that offers advantages over the usual approach of using fixed rules to determine what data is uploaded.
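The difference between a fixed upload rule and a model-driven one, and its effect on how much data leaves the endpoint, can be shown with a toy example. Everything here is hypothetical: the `EndpointEvent` fields, the interpreter-based fixed rule, the 0.5 threshold and the scores are invented for illustration and do not reflect CrowdStrike’s rules or any measured data.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class EndpointEvent:
    source: str     # hypothetical field: interpreter that ran the script
    payload: bytes  # data that could be uploaded to the cloud


def fixed_rule(event: EndpointEvent) -> bool:
    # Static rule: upload everything from a watched interpreter.
    return event.source in {"powershell", "wscript"}


def model_rule(
    score_fn: Callable[[EndpointEvent], float], threshold: float
) -> Callable[[EndpointEvent], bool]:
    # Model-driven rule: upload only what the endpoint model finds suspicious.
    return lambda event: score_fn(event) >= threshold


def bytes_uploaded(
    events: Iterable[EndpointEvent], should_upload: Callable[[EndpointEvent], bool]
) -> int:
    # Total payload size that a given upload policy would send.
    return sum(len(e.payload) for e in events if should_upload(e))


# Toy batch; scores are a placeholder lookup, not real model output.
events = [
    EndpointEvent("powershell", b"a" * 400),
    EndpointEvent("powershell", b"b" * 300),
    EndpointEvent("wscript", b"c" * 200),
    EndpointEvent("python", b"d" * 100),
]
toy_scores = {events[0]: 0.92, events[1]: 0.10, events[2]: 0.05, events[3]: 0.70}

print(bytes_uploaded(events, fixed_rule))  # 900
print(bytes_uploaded(events, model_rule(toy_scores.__getitem__, 0.5)))  # 500
```

In this toy batch the fixed rule uploads two low-scoring events and misses a high-scoring Python one, while the model-gated rule sends only the two high-scoring events.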

Conclusion: The Road Ahead

The limitations of running AI workloads on the endpoint are undergoing a transformative shift with the integration of the NPU in Intel Core Ultra processors. Deep learning neural network models, previously unattainable on the endpoint, become not only feasible but highly practical. The NPU shoulders the majority of the inference work, relieving the CPU of that processing burden and keeping system impact minimal. In short, the right workloads run on the right execution engines.

This breakthrough unlocks a realm of new possibilities, moving endpoint detection closer to the source while sending relevant data to the cloud for better in-depth analysis. Furthermore, the decision to dispatch additional data to the cloud is now AI-driven, replacing fixed rules. This empowers expanded, selective cloud data collection, ensuring scalability and minimizing network traffic.

Leveraging the NPU on Intel Core Ultra processors to deploy CNN models for script and fileless attack detection is an excellent continuation of CrowdStrike and Intel’s joint efforts, in collaboration with Dell, to bring integrated defenses to the deepest levels of the endpoint. However, this is merely one example of an endpoint AI model. Numerous other use cases are conceivable, including endpoint analysis of network traffic, application to data leakage protection and more. We are just beginning to explore the power of pushing AI to the edge for advanced cybersecurity applications using the NPU, aiming to secure the future and stop breaches everywhere.

Co-authors:

This blog was co-authored by Paul Carlson, Lead Data Scientist, Intel; and Pramod Pesara, Security Software Architect, Intel.

Additional Resources

- Watch Intel and CrowdStrike Enhance Cybersecurity with AI and NPU video

- Read the blog post CrowdStrike and Intel Corporation: Addressing the Threat Landscape Today, Tomorrow and Beyond

- Get a full-featured free trial of CrowdStrike Falcon® Prevent and see how true next-gen AV performs against today’s most sophisticated threats.

1. Based on Dell internal analysis, September 2023. Applicable to PCs on Intel processors. Not all features available with all PCs. Additional purchase required for some features.

2. Technical disclaimers: These results should not be taken with the understanding that the ratio of CPU usage, memory usage, or inference time between CPU only and CPU+NPU will remain the same with a different/smaller model – even one using the same neural architecture as the model under test. This is also not a general claim about the performance of other models on the NPU, even other CNNs. The result should only be taken as an understanding that, for this specific CNN model developed by CrowdStrike, a smaller distilled model of the same design would likely have an average inference time after the first of <= ~25ms and a CPU overhead <= ~1%. Note that featurization/tokenization were not measured as part of this test. The model was tested using the OpenVINO framework. Results that are based on pre-production systems and components as well as results that have been estimated or simulated using an Intel Reference Platform (an internal example new system), internal Intel analysis or architecture simulation or modeling are provided to you for informational purposes only. Results may vary based on future changes to any systems, components, specifications or configurations.
