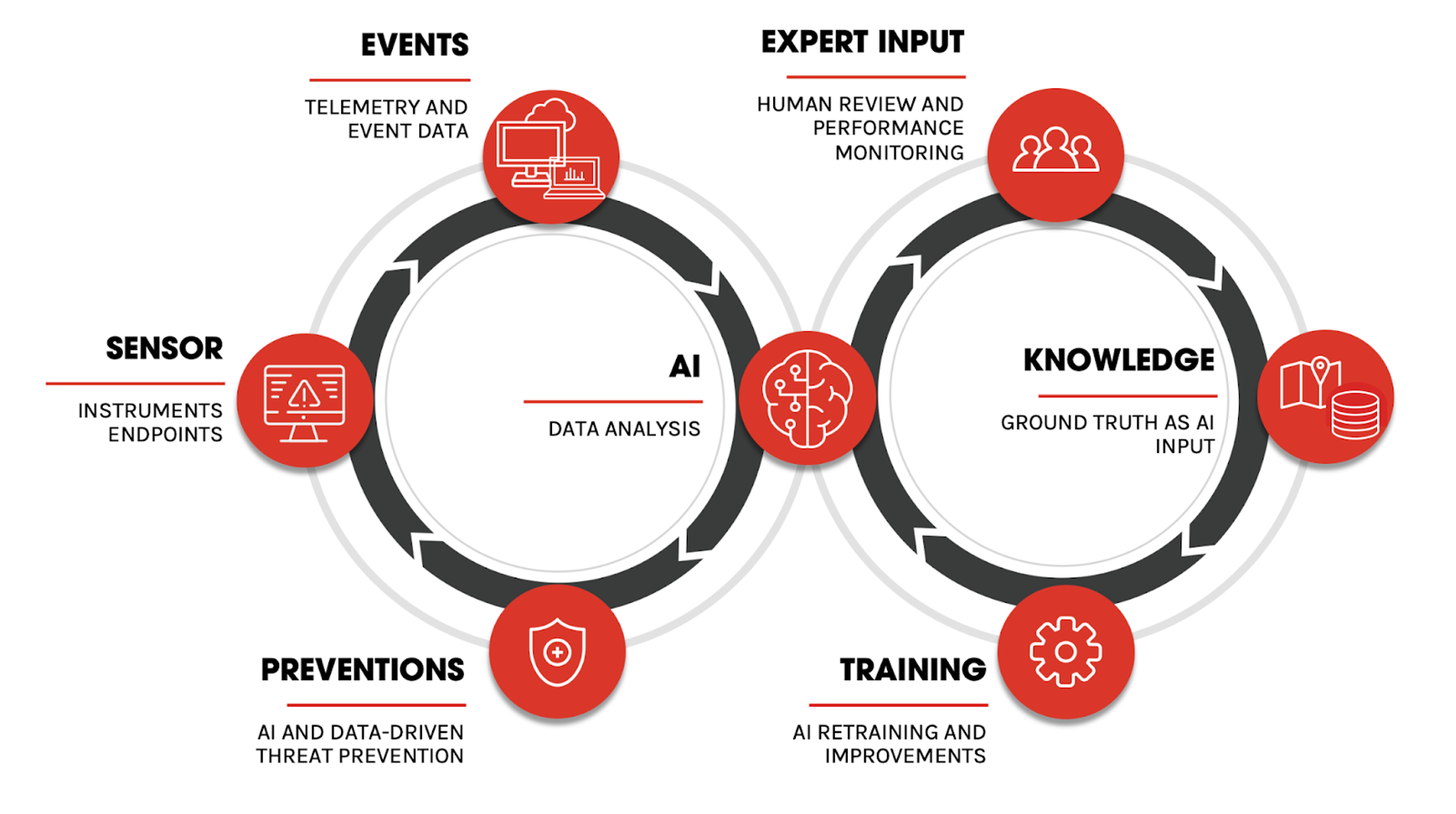

The CrowdStrike Security Cloud processes over a trillion events from endpoint sensors per day, but human professionals play a vital role in providing structure and ground truth for artificial intelligence (AI) to be effective. Without human professionals, AI is useless.

There is a new trope in the security industry, and it goes something like this: To keep yourself safe, you need an AI-powered solution that can act on its own, and to do that, you need to keep those pesky humans away from it. As a practitioner with a track record of bringing AI to cybersecurity (not because marchitecture demands it these days but because of its actual utility in solving security problems), I find this characterization puzzling. If my view sounds controversial to you, note that it is only so in the cybersecurity industry. Among AI and machine learning (ML) researchers, the need for human input is entirely uncontroversial. And even in other industries, leveraging human expertise is perfectly normal. How normal? You can purchase services to get your data sets labeled by humans. Some companies even leverage crowdsourced processes to get labels from regular users. You have probably already contributed to such an effort when proving to a website that you’re not a robot.

Fallacies

How did this misanthropic security posture become so pervasive? Two fallacies are at play. If you are a glass-half-full person, you could call them misconceptions. But if you focus on the top half of the glass, you might call them misrepresentations.

First, artificial intelligence is not, in fact, intelligent. Have a conversation with your smart speaker to reassure yourself of that. AI is a set of algorithms and techniques that often produce useful results but sometimes fail in odd and unintuitive ways. AI even has its own distinct attack surface that adversaries can exploit if it is left unprotected. Treating AI as a panacea for our industry’s woes is dangerous, as I discussed last year in an invited talk at the workshop on Robustness of AI Systems Against Adversarial Attacks.

Second, we are all still jaded from the signature days. Back then, signatures got deployed and initially stopped threats, then started to miss new ones, prompting humans to write new signatures and restarting the cycle the next day. Naturally, this approach is a losing proposition: Not only is the model purely reactive, but its speed is also limited by human response time. Of course, this is not how AI models are integrated to prevent threats. No human interaction is needed for an AI model in the CrowdStrike Falcon® platform to stop a threat dead in its tracks. CrowdStrike specifically uses AI to detect threats that have not yet been conceived, without requiring any updates.

Data, Data, Data

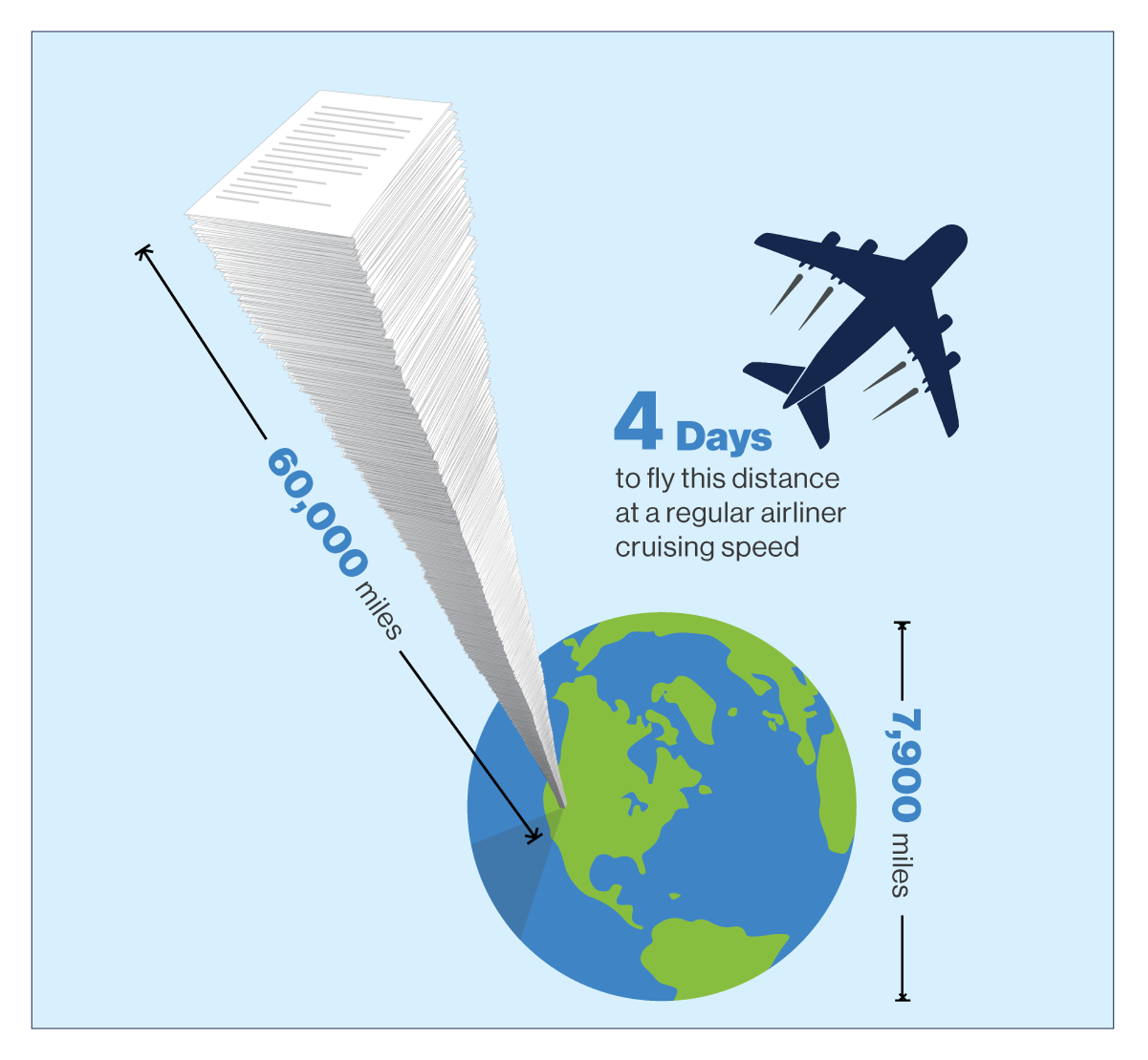

What does it take to train an AI model that can reliably pull off such a feat? Most importantly, it takes data, and a lot of it. The CrowdStrike Security Cloud processes over a trillion events from endpoint sensors per day. To put this into perspective, a ream of 500 sheets of office printer paper is about 50 millimeters (about 2 inches) thick. A trillion sheets would stack about 100,000 kilometers high, or roughly 60,000 miles. That is enough miles to earn you gold status every day on most airlines, but it would take you more than four days to fly this distance at a typical airliner cruising speed. And because the stack grows by another 100,000 kilometers every day, by the time you land, it will have reached the moon, about 384,000 kilometers away.

What matters, however, is that this metaphorical stack is not only tall. The CrowdStrike Security Cloud also has a large footprint covering facets such as endpoint security, cloud security, identity protection, threat intelligence and much more. For each of these facets, we process complex and nuanced data records. All of this information gets contextualized and correlated in our proprietary CrowdStrike Threat Graph®, a large distributed graph database we developed. The Falcon platform was designed from the ground up as a cloud-native system to process this volume of data effectively and in meaningful ways. None of this is possible on an appliance. And none of this is possible with hybrid cloud solutions, i.e., clouds that are merely stacks of vendor-managed rack-mounted appliances. Those make as much sense as streaming video across the internet from a VCR.

More data allows us to spot fainter signals. Imagine plotting the latitude and longitude of U.S. cities onto graph paper. Initially, you will see some randomly scattered points. After doing this for a large number of cities, a familiar shape will slowly emerge out of the cloud of points: the shape of the United States. However, that shape would never have become apparent if everyone had used their own “local” graph paper to plot a handful of cities in their vicinity.
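The paper-stack comparison at the start of this section is plain arithmetic. The short Python sketch below reproduces it under assumptions that are not from the post: one sheet per event, 0.1 mm per sheet (50 mm per 500-sheet ream), an airliner cruising speed of about 900 km/h, and an average Earth-Moon distance of about 384,400 km.

```python
# Back-of-the-envelope check of the paper-stack metaphor.
# Assumptions (not from the original post): one sheet per event,
# 50 mm per 500-sheet ream, ~900 km/h cruise speed, ~384,400 km to the Moon.

EVENTS_PER_DAY = 1_000_000_000_000   # "over a trillion events" per day
REAM_SHEETS = 500
REAM_THICKNESS_MM = 50.0
CRUISE_SPEED_KMH = 900.0             # assumed typical airliner cruise speed
EARTH_MOON_KM = 384_400.0            # average Earth-Moon distance
KM_PER_MILE = 1.609344


def stack_height_km(sheets: int) -> float:
    """Height in kilometers of a stack of the given number of sheets."""
    sheet_mm = REAM_THICKNESS_MM / REAM_SHEETS   # 0.1 mm per sheet
    return sheets * sheet_mm / 1_000_000         # 1 km = 1,000,000 mm


daily_km = stack_height_km(EVENTS_PER_DAY)
daily_miles = daily_km / KM_PER_MILE
flight_days = daily_km / CRUISE_SPEED_KMH / 24

# Assume the stack starts at zero at takeoff and grows by one day's worth
# of sheets per day while you fly.
height_on_landing_km = daily_km * flight_days

print(f"One day of events: {daily_km:,.0f} km ({daily_miles:,.0f} miles)")
print(f"Flight time at cruise speed: {flight_days:.1f} days")
print(f"Stack height on landing: {height_on_landing_km:,.0f} km "
      f"(Moon reached: {height_on_landing_km >= EARTH_MOON_KM})")
```

With these assumptions the flight takes a little over four and a half days, by which time the stack has grown to roughly 460,000 km, past the Moon.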

Structure and Ground Truth

So how do humans fit into the picture? If there is so much information piled onto our metaphorical stack of printer paper that even an airliner could not keep up with it, how do humans stand a fighting chance of making an impact?

There are two ways. First, stacking the sheets is not the smartest way to organize them. Laying them out flat next to each other results in a paper square of about 250 by 250 kilometers (about 150 miles per side). That is much more manageable: An area like this could be mapped. If we instead organize the reams of paper in a cube, it would measure about 180 meters (about 600 feet) per edge. Notice that we are now talking about meters, no longer kilometers, which makes the data a lot more compact and ready to be charted. The takeaway is that the problem becomes more tractable when we organize data in more dimensions and consider adjacencies. That is the mission of our cloud and Threat Graph.

Second, not all data is created equal. There is another type of data to which humans can contribute. We call this type of data ground truth, and it has a significant impact on the training of AI models. Ground truth describes how we want an AI model to behave given a certain input. For our metaphorical paper stack, an example of ground truth would be whether a sheet of paper corresponds to a threat (say, a red sheet) or to benign activity (a green sheet). If you organize your data in meaningful ways, as described earlier, you need only a few colored sheets to deduce information for whole reams of paper. Imagine you pull a sheet out of a ream somewhere in our paper cube, and it happens to be red. The other sheets in that ream are likely red, too. And some of the adjacent reams will also mostly have red paper in them. That is how certain types of AI learn: They figure out how to react to similar (adjacent) inputs based on ground truth. This is called supervised learning. Supervised learning is a powerful way to create highly accurate classification systems, i.e., systems that have high true positive rates (detecting threats reliably) and low false positive rates (rarely raising alarms on benign behavior).
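To make the adjacency idea concrete, here is a minimal Python sketch of one supervised technique, k-nearest neighbors, chosen for illustration rather than taken from the Falcon platform. A handful of hypothetical labeled points play the colored sheets, each new point takes the majority label of its three nearest labeled neighbors, and a separate set of labeled points is used to compute the true positive and false positive rates. One benign test point deliberately sits next to the threat cluster so that the false positive rate is not trivially zero.

```python
import math
from collections import Counter

# Hypothetical ground truth: a few "colored sheets" whose position in a
# 2-D feature space stands in for how the data has been organized.
# 1 = threat (red sheet), 0 = benign (green sheet).
labeled = [
    ((1.0, 1.0), 1), ((1.2, 0.8), 1), ((0.9, 1.3), 1),
    ((5.0, 5.0), 0), ((5.2, 4.7), 0), ((4.8, 5.3), 0),
]


def knn_predict(point, examples, k=3):
    """Label a point by majority vote of its k nearest labeled neighbors."""
    nearest = sorted(examples, key=lambda ex: math.dist(point, ex[0]))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def rates(y_true, y_pred):
    """Return (true positive rate, false positive rate) for 0/1 labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    return tp / (tp + fn), fp / (fp + tn)


# Held-out points with their own (hypothetical) ground truth for evaluation.
# (2.0, 2.0) is benign but lies close to the threat cluster.
test_points = [(1.1, 1.1), (0.7, 0.9), (4.9, 5.1), (5.5, 5.5), (2.0, 2.0)]
test_labels = [1, 1, 0, 0, 0]

predictions = [knn_predict(p, labeled) for p in test_points]
tpr, fpr = rates(test_labels, predictions)
print(predictions, f"TPR={tpr:.2f}", f"FPR={fpr:.2f}")
```

Here the classifier catches both held-out threats (a TPR of 1.0) but flags one of the three benign points (an FPR of about 0.33), which is exactly the kind of error that evaluating against ground truth exposes.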
Not all learning needs to be conducted using ground truth (the domain of unsupervised learning, for example, is concerned with other techniques). But as soon as it is time to evaluate whether such an AI system works as intended, you will need ground truth, too. Lastly, since ground truth is often a rare commodity, rarer than other data, some techniques blend these two approaches. In semi-supervised learning, an AI is trained on large amounts of data in an unsupervised way and then tuned with supervised training on a smaller amount of ground truth. In self-supervised learning, the AI takes its clues from structure in the data itself.
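Semi-supervised learning covers many techniques. A minimal Python sketch of one simple variant, cluster-then-label, shows the shape of the idea: k-means groups a larger pool of unlabeled points without any ground truth, and then just two labeled points name the resulting clusters. All data here is hypothetical, and this is not a description of how CrowdStrike's models are trained.

```python
import math
from collections import Counter


def nearest_cluster(point, centroids):
    """Index of the centroid closest to the point."""
    return min(range(len(centroids)), key=lambda i: math.dist(point, centroids[i]))


def kmeans(points, centroids, iterations=10):
    """Plain k-means (Lloyd's algorithm) from the given starting centroids."""
    centroids = list(centroids)
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            clusters[nearest_cluster(p, centroids)].append(p)
        # Move each centroid to the mean of its points (keep it if empty).
        centroids = [
            tuple(sum(c) / len(cluster) for c in zip(*cluster)) if cluster
            else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids


# Step 1 (unsupervised): a pool of unlabeled, hypothetical data points.
unlabeled = [(1.0, 1.1), (0.8, 1.3), (1.2, 0.9), (1.1, 1.4), (0.9, 0.7),
             (6.0, 5.8), (5.7, 6.2), (6.3, 6.1), (5.9, 5.6), (6.1, 6.4)]
# Fixed starting centroids keep the sketch reproducible; practical k-means
# usually uses randomized seeding such as k-means++.
centroids = kmeans(unlabeled, centroids=[unlabeled[0], unlabeled[5]])

# Step 2 (supervised): only two pieces of ground truth
# (1 = threat, 0 = benign) name the clusters by majority vote.
ground_truth = [((1.0, 1.0), 1), ((6.0, 6.0), 0)]
votes = [Counter() for _ in centroids]
for point, label in ground_truth:
    votes[nearest_cluster(point, centroids)][label] += 1
cluster_label = [v.most_common(1)[0][0] if v else None for v in votes]


def classify(point):
    """Label a new point with the label of its nearest cluster."""
    return cluster_label[nearest_cluster(point, centroids)]


print([classify(p) for p in [(0.5, 1.5), (6.5, 5.5), (1.3, 1.2)]])
```

Two labels were enough to name ten points here only because the unlabeled data already formed well-separated groups; that is the role organizing the data plays in the paper-cube analogy.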

Humans, Humans, Humans

At CrowdStrike, we designed our systems to maximize ground truth generation. For example, whenever CrowdStrike Falcon® OverWatch™ threat hunters find an adversary on the network, those findings become new ground truth. Similarly, when the OverWatch experts evaluate suspicious activity as benign, that evaluation also becomes ground truth. Those data points help train or evaluate AI systems. We generate data of this kind at scale every day from our vantage point in the cloud. This allows us to train better models and build better systems with better-understood performance characteristics.

AI systems can also flag incidents where ground truth is sparser and uncertainty is higher. While the AI can still prevent threats in those circumstances without delay, the flagged data can later be reviewed by humans to boost the amount of available ground truth where it matters most. Alternatively, other means can provide additional data, such as a detonation within the CrowdStrike Falcon® Intelligence™ malware analysis sandbox to observe threat behaviors in a controlled environment. Such solutions are based on a paradigm called active learning.

Active learning is a useful way to spend the limited resource of human attention where it matters the most. AI decisions do not get stalled: The AI continues to analyze and stop threats. We call this the “fast loop.” The Falcon OverWatch team, among others, analyzes what our AI systems surface and provides an expert disposition, which we feed back into our AI algorithms. Through this route, our AI models receive a constant stream of feedback about where they were successful and where we spotted and stopped novel attacks by other means. The AI learns from this feedback and incorporates it into future detections. We call this part the “long loop.” As a result, our AI improves constantly as new data enters the system.
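The fast and long loops can be sketched with uncertainty sampling, a common active-learning strategy. In the minimal Python sketch below, which uses a deliberately toy one-dimensional model, the model decides on every event immediately, queues the events whose scores are closest to 0.5 for review, and is retrained once a hypothetical `analyst` function (standing in for expert dispositions) labels the queued events. The model, the data and the review budget are all assumptions made for illustration.

```python
import math


def train(labeled):
    """Toy 1-D model: put the decision threshold halfway between the mean
    feature value of benign (0) and threat (1) examples."""
    benign = [x for x, y in labeled if y == 0]
    threat = [x for x, y in labeled if y == 1]
    return (sum(benign) / len(benign) + sum(threat) / len(threat)) / 2


def threat_score(x, threshold, steepness=2.0):
    """Logistic score in (0, 1); a score near 0.5 means the model is unsure."""
    return 1 / (1 + math.exp(-steepness * (x - threshold)))


def fast_loop(events, threshold, review_budget):
    """Decide on every event right away (never waiting for a human), and pick
    the least certain events (score closest to 0.5) for later review."""
    decisions = {x: threat_score(x, threshold) >= 0.5 for x in events}
    by_uncertainty = sorted(
        events, key=lambda x: abs(threat_score(x, threshold) - 0.5))
    return decisions, by_uncertainty[:review_budget]


def long_loop(labeled, review_queue, analyst):
    """Experts label the queued events; the new ground truth retrains the model."""
    labeled = labeled + [(x, analyst(x)) for x in review_queue]
    return labeled, train(labeled)


def analyst(x):
    """Hypothetical expert disposition: anything above 4.0 is a threat."""
    return int(x > 4.0)


labeled = [(0.0, 0), (1.0, 0), (9.0, 1), (10.0, 1)]
threshold = train(labeled)                # 5.0, so 4.6 and 4.8 slip through
events = [2.0, 3.5, 4.6, 4.8, 5.7, 8.0]

decisions, queue = fast_loop(events, threshold, review_budget=2)
labeled, threshold = long_loop(labeled, queue, analyst)
new_decisions, _ = fast_loop(events, threshold, review_budget=0)

print("reviewed:", queue)
print("threshold after feedback:", round(threshold, 2))
print("blocked before:", [x for x in events if decisions[x]])
print("blocked after: ", [x for x in events if new_decisions[x]])
```

The two reviewed events move the threshold from 5.0 to about 3.8, so the events at 4.6 and 4.8 that the first model let through are blocked after feedback. A real system would use far richer models, but the sketch mirrors the intent of the loops described above: decisions never wait for the review, and human attention goes to the cases the model is least sure about.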

Proof Points

We prove that this approach is superior every day in the field when we repel adversaries from our customers’ networks, prevent theft of data and ensure that the lifeblood of the companies we serve, their information and intellectual property, is protected. In addition, we have a rigorous testing record spanning numerous independent third-party evaluations by leading testing organizations such as AV-Comparatives, SE Labs and MITRE. AI-centric vendors tend to avoid tests that penalize false positives, but not CrowdStrike. Public reports from independent testing organizations attest to CrowdStrike’s commitment to transparency, which matters all the more as AI becomes a pervasive technology for working with data.

Outside of testing, CrowdStrike was also the first NGAV vendor to make its technology readily available on VirusTotal for public scrutiny, and we make our technology available to the research community on Hybrid Analysis. Transparency is a central tenet of our privacy-by-design approach: CrowdStrike designs its offerings so that customers can see exactly what is processed, make decisions about how it’s processed and select retention periods.

Final Thoughts

AI is becoming a more commonplace tool for stopping cyber threats, but it is important to look beyond the mere presence of an AI algorithm somewhere in the data flow. To gauge the efficacy of an AI system, it is critical to understand where its data comes from, including the necessary ground truth. Artificial intelligence can learn only if new facts constantly enter the system at scale, and humans in the loop are a hallmark of a well-designed AI system.

Additional Resources

- View this video to see how Falcon OverWatch proactively hunts for threats.

- Download this data sheet to learn how Falcon OverWatch stops hidden advanced attacks.

- Get a full-featured free trial of CrowdStrike Falcon® Prevent™ and learn how true next-gen AV performs against today’s most sophisticated threats.
