- What is efficacy, and why are independent tests necessary?

- What is the difference between MITRE ATT&CK® TTPs and the cyber kill chain?

- How can I distinguish between benign and malicious techniques?

- How do I build and follow emulation plans based on real-world observations?

- Is my endpoint security solution working?

Finding an endpoint security solution that will protect an organization from damage, including loss of reputation, is never an easy job. Fortunately, companies can turn to independent third-party testing organizations for objective, comprehensive and consistent reporting on the efficacy, performance and overall usability of endpoint security solutions. These reports help decision-makers understand why one endpoint security solution may be a better fit for their organization than others, and how efficacy affects the total cost of ownership (TCO).

Because efficacy results matter so much to organizations, it’s essential when picking an endpoint security vendor to look for a long history of third-party testing that demonstrates efficacy. Business interruptions caused by false positives, quality issues or missed detections all add to the TCO of a security product. Human operational costs, such as how many SOC analysts it takes to operate the software, also need to be evaluated. A commitment to testing also helps answer whether an endpoint security solution is working as intended and delivering on its promise to stop breaches, and it helps organizations understand the threat landscape and anticipate and prevent incidents that can lead to breaches.

Throughout this blog, we look closely at how adversary emulation through red teaming helps keep your organization secure. We dive into how to replicate, test and measure performance and outcomes throughout adversarial emulations by examining two use cases: ransomware and backdoor emulation. To show how adversarial emulation can measure a product’s capability against what actors do in the real world, we follow generic emulation plans based on observations of the tactics and techniques used by RaaS groups and backdoors.

What Is Cybersecurity Efficacy?

The simplest definition of efficacy in cybersecurity is an endpoint security solution’s ability to prevent and detect known and unknown threats. However, evaluating efficacy should also involve looking for characteristics such as capability, value and quality. A security solution shows efficacy when it delivers on its mission (for example, to stop breaches), reduces the total cost of ownership by being easy to integrate and operate, and avoids any negative impact on performance or alert quality. But knowing what efficacy is and properly testing for it are different things.

Know the Difference: MITRE TTPs vs. Cyber Kill Chain

The cybersecurity industry regularly uses terms like MITRE ATT&CK and cyber kill chain to describe threats or defensive tactics. But to understand how they apply in endpoint security testing and shape testing scenarios, one must understand what each stands for and how they are used together.

MITRE ATT&CK is a framework, not a standard for detection or prevention. It focuses on what real adversaries have done in the past. That does not mean that all techniques described in the framework are inherently malicious, are executed in the order laid out in the framework, or should immediately be blocked and treated as malicious by endpoint security solutions or security teams. The framework is regularly updated with the latest techniques that adversaries use and security researchers find in the wild.

The cyber kill chain, a term derived from a military model, describes the series of stages that trace a cyberattack from early reconnaissance to lateral movement and data exfiltration. In a nutshell, the cyber kill chain helps describe threats, advanced adversaries and even innovative attacks by breaking an attack down into its stages.

The primary difference between the MITRE ATT&CK framework and the cyber kill chain is that the ATT&CK matrix lists tactics and techniques and doesn’t propose a specific order of operations, like the kill chain.

Consequently, third-party testing organizations and even red teams use the ATT&CK techniques (categorized under various ATT&CK tactics) at different phases of an attack in a way that adversaries might operate in the real world. For example, an ATT&CK scenario could start with a spear-phishing email as the Initial Access tactic, then move to the Privilege Escalation tactic by using PE injection, and then come back to the Execution tactic by using WMI, PowerShell or other command and script interpreters.
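As a rough illustration of the point above, the sketch below models the example scenario as an ordered list of steps and reports which steps move "backward" relative to the left-to-right column order of the Enterprise ATT&CK matrix. The technique IDs, the `Step` class and the helper name are additions for illustration only and are not part of any testing organization's methodology.

```python
from dataclasses import dataclass

# Enterprise ATT&CK tactics, in the left-to-right column order of the matrix.
TACTIC_ORDER = [
    "Reconnaissance", "Resource Development", "Initial Access", "Execution",
    "Persistence", "Privilege Escalation", "Defense Evasion",
    "Credential Access", "Discovery", "Lateral Movement", "Collection",
    "Command and Control", "Exfiltration", "Impact",
]


@dataclass(frozen=True)
class Step:
    tactic: str        # ATT&CK tactic the step is exercised under
    technique_id: str  # ATT&CK technique or sub-technique ID
    note: str          # what the emulation does at this step


# The example scenario from the paragraph above (IDs added for illustration).
SCENARIO = [
    Step("Initial Access", "T1566", "spear-phishing email"),
    Step("Privilege Escalation", "T1055.002", "PE injection"),
    Step("Execution", "T1059.001", "PowerShell"),
]


def steps_out_of_matrix_order(scenario):
    """Return steps whose tactic sits left of the previous step's tactic."""
    out = []
    for prev, cur in zip(scenario, scenario[1:]):
        if TACTIC_ORDER.index(cur.tactic) < TACTIC_ORDER.index(prev.tactic):
            out.append(cur)
    return out


if __name__ == "__main__":
    for step in steps_out_of_matrix_order(SCENARIO):
        print(f"{step.tactic} ({step.technique_id}) follows a later-column tactic")
```

Here the Execution step is reported because it follows Privilege Escalation, which is exactly the kind of ordering a strictly linear kill chain would not express.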

Just Because a Behavior Matches an ATT&CK Technique Doesn’t Mean It’s Malicious

Process Discovery (T1057) is a technique mapped in the MITRE framework, but it’s not necessarily malicious, and detecting it does not automatically mean it is part of a kill chain. For example, enumerating running processes allows an application to identify a running instance that must be terminated before proceeding with an update.

A security solution that detects and reports on this technique every time it’s triggered on an endpoint would result in considerable false positive detections, creating a lot of noise for security teams.

The same is true for legitimate tools like Task Manager, Process Explorer or Windows Management Instrumentation (WMI), which are also often (ab)used by adversaries. Being mapped in the MITRE ATT&CK framework does not make them malicious. Administrators and adversaries use WMI to perform the same actions but for different reasons. Interrogating running processes, checking the state of installed applications or Windows updates, configuring settings, scheduling processes and even enabling or disabling features such as logging are all legitimate activities. However, the context in which they’re performed makes the difference between friend and foe. Detecting and reporting on all of these activities as malicious without a complete picture creates alert fatigue, where the stream of potential threats can bury legitimate signals in noise.

Another technique commonly associated with ransomware behavior is File and Directory Discovery (T1083). Real-world ransomware families use this technique to enumerate all mounted drives or search for files by matching them against a list of file extensions targeted for encryption. A simulation of this technique — for the purpose of validating if an endpoint security solution correctly identifies it — should emulate ransomware's behavior in the wild.

Discovering some of these techniques is difficult even on a good day, let alone distinguishing between which ones are benign and which ones are malicious. Tests and testing scenarios, such as those performed by independent third-party organizations or MITRE, can help businesses understand which endpoint security solution can spot malicious intent and accurately detect and prevent threats. But instead of decorating logs with each spotted MITRE ATT&CK tactic, testing scenarios need to focus on incident accuracy and reducing alert fatigue.
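One way to picture the difference between labeling every technique and judging it in context is the minimal sketch below. It groups observed techniques by host and process tree and only raises an incident when the combined weight crosses a threshold. The weights, the threshold, the `Observation` fields and the sample data are all hypothetical values chosen for illustration; they do not describe how any particular product scores activity.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical weights: low for dual-use discovery, high for impact behavior.
WEIGHTS = {
    "T1057": 1,  # Process Discovery
    "T1083": 1,  # File and Directory Discovery
    "T1490": 5,  # Inhibit System Recovery
    "T1486": 8,  # Data Encrypted for Impact
}
INCIDENT_THRESHOLD = 10  # hypothetical cut-off, to be tuned per environment


@dataclass(frozen=True)
class Observation:
    host: str
    process_tree: str  # identifier of the root process the activity belongs to
    technique_id: str


def score_incidents(observations):
    """Sum the weights of the distinct techniques seen per (host, process tree)."""
    seen = defaultdict(set)
    for obs in observations:
        seen[(obs.host, obs.process_tree)].add(obs.technique_id)
    return {key: sum(WEIGHTS.get(t, 0) for t in techs) for key, techs in seen.items()}


def incidents(observations):
    """Return the (host, process tree) pairs whose score crosses the threshold."""
    scores = score_incidents(observations)
    return sorted(key for key, score in scores.items() if score >= INCIDENT_THRESHOLD)


# Made-up sample data: an updater that lists processes, and a ransomware-like chain.
SAMPLE = [
    Observation("ws1", "updater.exe", "T1057"),
    Observation("ws1", "updater.exe", "T1057"),
    Observation("ws2", "invoice.exe", "T1057"),
    Observation("ws2", "invoice.exe", "T1083"),
    Observation("ws2", "invoice.exe", "T1490"),
    Observation("ws2", "invoice.exe", "T1486"),
]

if __name__ == "__main__":
    print(incidents(SAMPLE))
```

In this sample, the updater that only enumerates processes stays below the threshold, while the chain that combines discovery with recovery inhibition and encryption is surfaced as a single incident.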

Setting the Right Expectations

The MITRE framework is excellent for visibility across tactics and techniques, but no security vendor will map this framework 1:1. There’s no silver bullet to properly assess the efficacy of an endpoint security solution against all possible attack vectors, tactics, techniques and procedures. Each MITRE technique has unique success criteria in the evaluation. Sometimes it’s appropriate to make a technique visible through threat hunting or through detection and prevention, and each test needs to set clear expectations for what success looks like for each TTP under test. This is why independent testing organizations go to great lengths to create scenarios that are comprehensive, accurate, unbiased and as close to reality as possible.

There is no universal test or award that assesses the efficacy of endpoint security solutions. Before setting any expectations, it is important to properly document what outcomes the various testing organizations provide. No single test determines the industry leader, so don’t expect a single source of truth.

Even when companies conduct their own internal testing, they may get inaccurate results if they do not set the right expectations. Improperly defining testing expectations, scenarios and results can have a significant impact on testing accuracy. For example, “execute this sample to see if it’s detected” might not be specific enough to support the “it’s detected” conclusion, in the same way that “let’s encrypt these 50 files” is not enough to support the claim of “ransomware-like behavior.”

Similarly, detecting a screenshot capture is irrelevant unless it happens in the context of an attack; after all, whose desktop isn’t full of screenshots? Current testing approaches involve either brittle (too specific) or broad detections, which lack the proper context for an incident. For instance, “atomic testing,” which consists of testing against a particular technique without chaining it to others, doesn’t reveal anything about why the technique merits a detection or what makes it malicious. As a result, alerts that are either too broad or too specific are a direct consequence of inadequate testing, leading to analyst fatigue or low fidelity.

In addition, a poor understanding of real-world threats and adversary behavior can produce misleading test results that do not reflect an endpoint security solution’s true capability to detect and protect against real-world threats and malicious behavior. For instance, ransomware operators have evolved their operations, especially when engaging in big game hunting (BGH). The CARBON SPIDER/DarkSide attack on a U.S. fuel pipeline and the REvil ransomware attack that compromised the Kaseya VSA software are recent incidents in which the attack chain leading up to ransomware execution on a compromised machine was complex.

When emulating ransomware-like behavior to test the efficacy of an endpoint security solution, understanding how detection engineering works is important when distinguishing between a malicious and a benign MITRE tactic, technique or procedure. Red teams should also emulate adversarial behavior by studying and simulating the in-the-wild attack chains.

Understanding this lifecycle of how adversaries improve their tactics, techniques and procedures means building more effective detection engineering that can reduce the time to detect, respond and recover from threats.

Asking questions like “What do I have to detect?” “What tactics, techniques and procedures are actually relevant?” and “How is this relevant to organizations?” will help identify the recipe for building, managing and reviewing detection capabilities as well as fine-tuning false positives so as not to overburden already overworked SOC teams and analysts.
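To make "clear expectations for what success looks like for each TTP" concrete, the sketch below records a minimum expected outcome per technique and compares it with what was observed during a test run. The outcome levels, the `Expectation` fields, and the plan and observed values are illustrative placeholders, not real test results or an established scoring scheme.

```python
from dataclasses import dataclass
from enum import IntEnum


class Outcome(IntEnum):
    NONE = 0        # nothing recorded
    TELEMETRY = 1   # visible to threat hunting only
    DETECTION = 2   # alert raised with context
    PREVENTION = 3  # activity blocked


@dataclass(frozen=True)
class Expectation:
    technique_id: str
    context: str       # what makes the behavior relevant in this test
    expected: Outcome  # minimum outcome that counts as success


def evaluate(expectations, observed):
    """Map each technique to True when the observed outcome meets the bar."""
    return {
        e.technique_id: observed.get(e.technique_id, Outcome.NONE) >= e.expected
        for e in expectations
    }


# Hypothetical plan: the same framework, but a different bar per technique.
PLAN = [
    Expectation("T1057", "process listing during host profiling", Outcome.TELEMETRY),
    Expectation("T1083", "enumeration of files by targeted extension", Outcome.DETECTION),
    Expectation("T1486", "bulk file encryption", Outcome.PREVENTION),
]

# Placeholder observations for the sake of the example.
OBSERVED = {
    "T1057": Outcome.TELEMETRY,
    "T1083": Outcome.TELEMETRY,
    "T1486": Outcome.PREVENTION,
}

if __name__ == "__main__":
    for technique, passed in evaluate(PLAN, OBSERVED).items():
        print(technique, "met expectation" if passed else "below expectation")
```

Writing the expectation down before the run keeps "it was detected" from being claimed after the fact for a technique that was only visible as telemetry.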

Behind the Wheel (Red Team Adversarial Emulation)

CrowdStrike is committed to driving improvement through rigorous testing, identifying and analyzing gaps proactively. Through constant adversary emulation of attack tactics, techniques and procedures we uncover attack paths and identify gaps in detection and response capabilities that help us continuously improve our protection capabilities. From a research and red team testing perspective, the goal of such an effort is to measure the product’s capability against what actors really do in the real world, and drive improvement. Performing emulation work enables better understanding of adversaries’ TTPs and how they’re actually used in the wild, and what's really happening under the hood in every stage of an attack. Taking the wheel and diving into this experience can return more information and a different perspective other than the one behind a dashboard or alert. It also provides a way to easily replicate, test and measure performance and outcomes without having to wait for an actual intrusion to take place.

Ransomware Emulation

Emulating ransomware behavior involves understanding the techniques and tools used in either automated or hands-on-keyboard ransomware incidents. The emulation plan should include using the same TTPs that belong to various operators or RaaS groups. The following generic emulation plan was designed based on observations from multiple tactics and techniques employed by RaaS groups. This will help paint a cyber kill chain as close to real-world conditions as possible, helping to simulate an incident accurately.

The proposed emulation plan involving threat actor tools or malware depicts an operator that employs a smash-and-grab method. Here is what should be considered:

- Use it “as is,” if possible.

- Use a sample and make changes in the binary directly, such as modifying IPs or URLs to point back to your “attacker” machine. This approach works efficiently on scripts.

- Observe what the sample is doing and recreate it in a defanged manner. For example, the following kill chain scenario includes techniques observed in actual Big Game Hunting (BGH) intrusions. This is the ideal way of doing proper emulation and testing, but it is resource-consuming because it involves development work.

- Innovatively emulate the sample, focusing more on the technique. If patching a sample isn’t possible, consider creating a script and packing it in a .exe.

The testing scenario occurs in an environment consisting of an attacker machine running Kali (the Debian-derived Linux distribution used for pen-testing and forensics) and two victim workstations joined to a Domain Controller. The end objective for this emulation is to deploy ransomware across all machines in the test range. For full visibility into the steps, try running the kill chain in a “Detection only” posture with maximum engine visibility settings enabled to better assess the endpoint security solution’s capabilities during each stage of the attack.

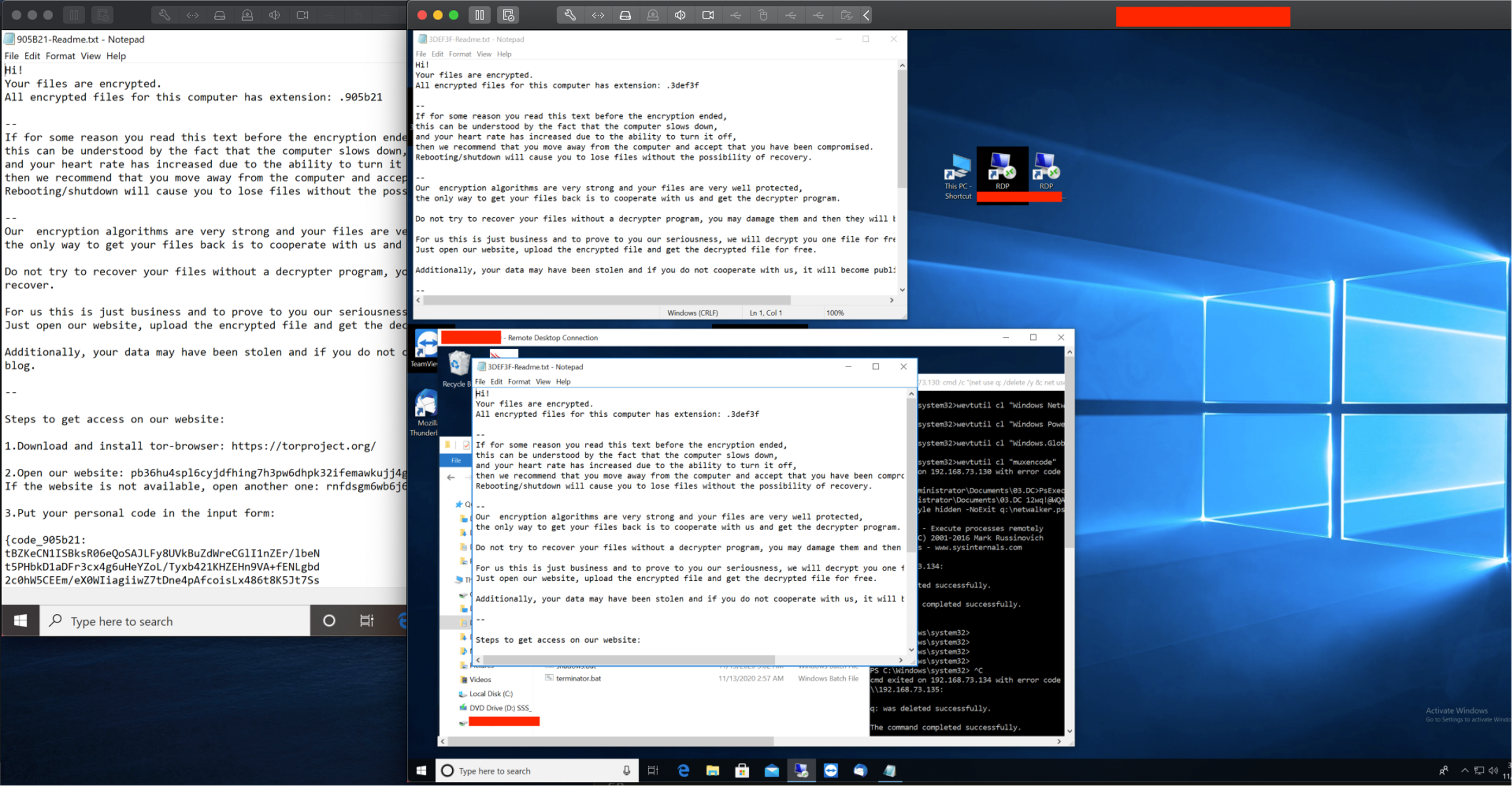

The first stage of the scenario involves simulating initial access through a password spray against a target workstation with exposed RDP using crowbar, trying more than 1,000 users and multiple password combinations. Once the user’s password is identified, the attacker connects via RDP to the victim with rdesktop (an open-source UNIX client for connecting to Windows Remote Desktop Services, capable of natively speaking RDP) and mounts a remote shared drive. After performing an initial set of discovery commands (whoami, arp, systeminfo, nltest, and various net.exe commands), ransomware operators copy their toolkit to an unusual user folder (%UserProfile%\Documents). These tools range from LaZagne, Mimikatz and PsExec to AdFind and COMahawk, along with several versatile batch scripts tied together to help attackers achieve a high execution speed. COMahawk (weaponizing CVE-2019-1405 and CVE-2019-1322) would create a new localadmin user account, enabling the attacker to further elevate privileges to NT AUTHORITY\SYSTEM using PsExec. From here, attackers can start running batch scripts that perform the following:

| Script | Purpose |
| --- | --- |
| deffoff.bat | Disables and deletes Windows Defender registry keys and services |
| 4admin.bat | Weakens the host security posture, by replacing utilman.exe and sethc.exe with taskmgr.exe, opening up RDP and modifying numerous RDP related settings |
| mimi_rights.bat | Modifies Wdigest registry entry to maximize Mimikatz results |
| "automim" suite | A collection of .cmd, .vbs and .bat files that automate the execution of Mimikatz and Lazagne. |
| adfind.bat | Starts the AdFind Active Directory query utility, runs various commands for recon purposes, and outputs results to various .txt files. |

Once attackers grab credentials belonging to a sysadmin who logged on to the host earlier, they perform lateral movement via RDP to a workstation that belongs to the system administrator. Attackers then copy a new series of tools over RDP. These tools include a copy of the legitimate dsquery.exe utility, a complex batch script that performs extensive discovery commands (including searching various Active Directory objects), and a complete suite of the legitimate third-party Windows Password Recovery Tools by Nirsoft. By leveraging them, the attacker will ultimately grab the Domain Administrator password, which he’ll use to hop onto the Domain Controller via RDP.

Figure 2. CrowdScore incident workbench showing activity and lateral movement from sysadmin workstation to DC

Once on the server, the attacker will disable Windows Defender via a PowerShell command and will drop the last part of his toolkit:

| Tool | Description |
| --- | --- |
| PsExec.exe | Well-known administration utility that enables command-line process execution on remote machines |
| ips.txt | Text file containing a list of targeted machines |
| Rclone.exe, rclone.conf | Open source tool that is used to exfiltrate files to a number of cloud storage providers; for this scenario, the .conf file was set up to exfiltrate data to a Dropbox account |
| grab.bat | Batch script that searches for various document file extensions and copies them over to a staging folder on the Windows Server |
| killav.bat | Batch script that stops security product processes and services |
| terminator.bat | Batch script that stops various services and processes prior to encryption, to close any potential locks on files |
| shadows.bat | Batch script that utilizes vssadmin.exe, wmic.exe, bcdedit.exe and wbadmin.exe to delete shadows and backups |
| clear.bat | Batch script that clears all Windows event logs with WevtUtil.exe |
| antiforensics.bat | Batch script that utilizes fsutil to zero out artifacts and to delete the staging folder |
| netwalker.ps1 | PowerShell script that includes an embedded Netwalker .dll and runs it directly in memory |

PsExec was leveraged to mount a share on all systems using the previously acquired Domain Administrator password and then execute all of the above scripts.

psexec.exe -accepteula @targets.txt cmd /c "(net use q: /delete /y & net use q: \\domaincontroller.domain\C$\share /user:domain\Administrator & q:\script.bat )"

Using the above method, the attacker collects .doc, .docx, .pdf, .xls and .csv files from all of the target systems and copies them back over to a local staging folder on the DC that was mounted as a share. The attacker then runs rclone and exfiltrates the collected data to a Dropbox account that he controls.

In preparation for encryption, the attacker attempts to kill multiple processes and Falcon services across the environment by pushing the associated batch scripts. Shadow copies and backups on all domain hosts are deleted, making recovery more difficult and increasing the pressure on the victim to pay the ransom. Netwalker is then executed in the same way. To thwart incident responders, the attacker finally runs his last batch scripts on all hosts to clear event logs and to zero out all the tools and scripts in their staging folder.
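From the defender's side, this pre-encryption stage is a useful place to validate detections during an emulation run. The sketch below matches process command lines against patterns commonly associated with deleting shadow copies, backups and event logs. The exact arguments used by the scripts in this scenario are not given above, so the patterns and the function name are assumptions for illustration and would need tuning before use.

```python
import re

# Command-line patterns commonly associated with recovery and log tampering.
# These are assumptions for illustration, not the contents of the scripts above.
PATTERNS = {
    "vssadmin delete shadows": re.compile(
        r"\bvssadmin(\.exe)?\b.*\bdelete\s+shadows\b", re.I),
    "wmic shadowcopy delete": re.compile(
        r"\bwmic(\.exe)?\b.*\bshadowcopy\b.*\bdelete\b", re.I),
    "bcdedit recovery disabled": re.compile(
        r"\bbcdedit(\.exe)?\b.*\brecoveryenabled\s+no\b", re.I),
    "wbadmin delete": re.compile(
        r"\bwbadmin(\.exe)?\b.*\bdelete\s+(catalog|systemstatebackup|backup)\b", re.I),
    "wevtutil clear log": re.compile(
        r"\bwevtutil(\.exe)?\b.*\s(cl|clear-log)\s", re.I),
}


def match_recovery_tampering(command_line):
    """Return the names of all patterns that match the given command line."""
    return [name for name, pattern in PATTERNS.items() if pattern.search(command_line)]


if __name__ == "__main__":
    samples = [
        "vssadmin.exe delete shadows /all /quiet",
        "vssadmin list shadows",
        "wevtutil cl Security",
    ]
    for cmd in samples:
        print(cmd, "->", match_recovery_tampering(cmd))
```

A match on its own is still just a signal. As discussed earlier, it becomes meaningful when it is correlated with the rest of the chain, such as remote execution via PsExec across many hosts.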

Hands-on-keyboard post-exploitation that delivers ransomware poses a significant and ever-increasing threat to companies as adversaries evolve and incorporate more techniques and capabilities in their toolkits. Successful adversarial emulation involves much more than executing a ransomware payload in a controlled environment to test detection capabilities. It means emulating the entire attack chain to understand the full breadth of protection and detection capabilities across all attack stages.

Backdoor Emulation

The following kill chain scenario emulates a simple backdoor behavior inspired by one of the known Carbon Spider backdoors currently in use. Harpy (aka “GRIFFON”) is the threat actor’s primary backdoor of choice, alongside Sekur. Harpy’s functionality is modular and is retrieved from the C2 server for runtime execution.

Figure 6. ProcessExplorer view with actual CARBON SPIDER Harpy backdoor execution (Click to enlarge)

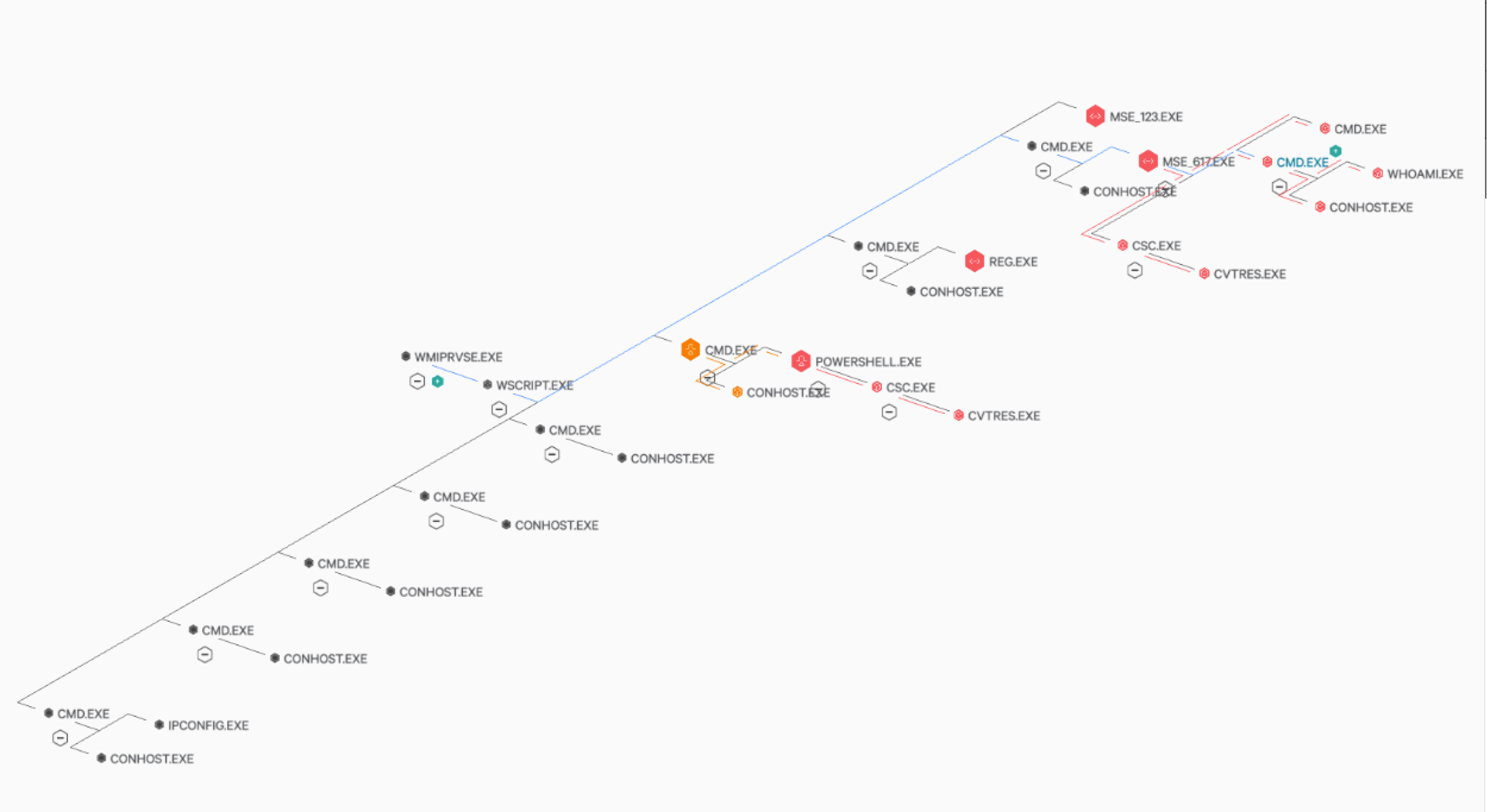

Figure 6. ProcessExplorer view with actual CARBON SPIDER Harpy backdoor execution (Click to enlarge)As seen in the image above, the kill chain involves a high-fidelity emulation of the real-world behavior for the adversary to assess detection and remediation capabilities properly. For our example, besides modifying the C2 addresses in an actual Domenus VBS downloader and the main Harpy JS module and pointing them back to our infrastructure, we also needed to emulate the Harpy C2. This was done by modifying a SimpleHTTPServer Python script to monitor specific HTTP POST request formats and URI path values while removing the XOR encryption mechanism altogether to keep things as simple as possible. The server was configured to respond with various modules and payloads, controlling the delivery through a simple POST passed through CURL, which contains a state change identifier. This adversarial emulation scenario also includes the option to deliver all available payloads automatically.

Figure 7. Communication between Harpy and the emulated C2, showing various payloads being served to the victim

The payloads that Harpy receives are delivered as standalone modules that gather information, capture screenshots, establish persistence, run a Metasploit stage and drop PE files on disk in various locations.

| Module Description | Module Details |
| --- | --- |
| Information gathering module 1 | The module performs initial profiling of the host, including but not limited to networking adapter information, a list of running processes, installed software, antivirus and a list of files on the user's desktop. |
| Information gathering module 2 | The module performs extensive system information gathering using Windows Management Instrumentation (WMI) queries, as seen in the actual Harpy module, including but not limited to username, hostname, Active Directory information, process list and desktop monitor type and screen size. |
| PowerShell Screenshot module | Harpy executes obfuscated PowerShell contained in %TEMP%\requires.txt, which is a lightweight screenshot module that can capture the active window or the user’s desktop window. The screenshot is written to disk, then uploaded back to the C2. |
| Persistence module | The module modifies registry keys under HKCU\Software\Microsoft\Windows NT\CurrentVersion\Winlogon, which causes Winlogon to load the system shell and execute the Harpy JS file for persistence. |
| PowerShell Masquerading and Metasploit module | The module copies the PowerShell binary to Appdata\Local\Temp\mse_617.exe and uses it to run a PowerShell stager for Metasploit. |
| Drop PE files on disk in various unusual locations | The module can drop various actor tools to disk (like TinyMet and PAExec) and renames them using the same naming convention as above (for example mse_123.exe). |

This testing scenario takes place in a simple environment, consisting of an attacker machine and a domain-joined victim workstation. We’re running the kill chain in a “Detection only” posture with maximum engine visibility settings enabled to get full visibility into the steps. This adversarial emulation scenario involves simulating initial access through a phishing email. The user downloads and runs the contents of a .zip attachment from his Downloads folder. The archive contains info.vbs, which is the modified Domenus VBS downloader. This will automatically return some initial piece of information to the attacker. The C2 will then proceed to serve the Harpy backdoor, writing it to disk as C:\Users\\AppData\Roaming\some.js and then will execute it with wscript.exe. The modules described above will then execute one by one, giving the attacker a reverse shell in the process to continue post-exploitation.

The attacker then runs a UAC bypass module to raise the shell’s integrity level from medium to high, allowing him to further elevate to NT AUTHORITY\SYSTEM through Metasploit’s getsystem command, which performs named-pipe impersonation. The attacker will perform various credential harvesting activities, including gathering saved credentials from browsers and passwords and hashes using Mimikatz. The attacker will then migrate to another process (winlogon.exe) and start a keylogger to capture more passwords or valuable information typed in by the user. After opening a cmd shell, the attacker issues various net.exe discovery commands and will attempt to collect data from the local system by searching for document files across the user’s profile. The attacker will then copy them to a staging location to exfiltrate them. The attacker will conclude the activity by issuing a command to clear event logs. Given the simple test setup, the scenario did not involve any lateral movement attempts using the dropped tooling. However, this could easily be part of a more extensive test range.

This emulation offered enhanced visibility into the actor’s tooling and behavior, as well as opportunities to research detections targeting suspicious WMI queries and scripting and to define new behavioral rules.
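As one example of a behavioral rule that this kind of emulation can help validate, the sketch below flags the masquerading step from the module table, where the PowerShell binary is copied to a file such as mse_617.exe. It compares the file name of the running image with the original file name embedded in the binary's version resource. The event keys are modeled on typical process-creation telemetry and, together with the watched list and the sample paths, are assumptions for illustration.

```python
import ntpath

# System utilities whose renamed copies are worth flagging (illustrative list).
WATCHED_ORIGINAL_NAMES = {"powershell.exe", "cmd.exe", "wscript.exe", "cscript.exe"}


def is_renamed_utility(event):
    """Return True when a watched utility runs under a different file name.

    `event` is a dict with hypothetical keys modeled on process-creation
    telemetry: "Image" (full path of the running binary) and
    "OriginalFileName" (taken from the PE version resource).
    """
    original = event.get("OriginalFileName", "").lower()
    image_name = ntpath.basename(event.get("Image", "")).lower()
    if original not in WATCHED_ORIGINAL_NAMES or not image_name:
        return False
    return image_name != original


if __name__ == "__main__":
    renamed = {
        "Image": r"C:\Users\user\AppData\Local\Temp\mse_617.exe",
        "OriginalFileName": "PowerShell.EXE",
    }
    genuine = {
        "Image": r"C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe",
        "OriginalFileName": "PowerShell.EXE",
    }
    print(is_renamed_utility(renamed), is_renamed_utility(genuine))
```

Because the check keys on metadata inside the binary instead of the file name, it would also cover the other mse_-prefixed copies without listing each name.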

Can Your Solution Stop Breaches?

When looking for an endpoint security solution, start by ensuring that it has a long-standing history of being tested, getting consistent results and having platform parity with major operating systems deployed across an organization. AV-Comparatives, SE Labs and MITRE run various tests and scenarios that test the efficacy of security solutions to identify tactics and techniques associated with known or unknown threats and adversaries.

What’s less straightforward when evaluating an endpoint security solution is the cost of stopping breaches. For example, an endpoint security solution may be great at flagging suspicious tactics and techniques and mapping them to the MITRE framework. Still, those alerts may not indicate malicious behavior and may end up creating alert fatigue for your organization’s IT or SOC teams. This directly translates into the person-hour costs of triaging benign incident alerts, which can become difficult to manage. This could also involve time spent restoring systems into production because of remediation processes triggered by false alarms.

Stopping breaches is about correctly identifying malicious behavior and intent early in the cyber kill chain and blocking the threat or adversary before the final stage of the attack is reached, without creating false incident alerts. To better understand how to test endpoint security, it’s important to know how testing scenarios work. This type of testing involves lots of planning, and a very firm grasp of the tactics and techniques that adversaries use in the wild. Independent testing organizations and red teams both use the same principles and concepts for emulation planning.

In the recent AV-Comparatives mid-year Business Main-Test Series of 2021, the CrowdStrike Falcon® platform achieved a 99.5% protection rate in the Real-World Malware Protection Test; a 99.8% protection rate and zero false positives on business software in the Malware Protection Test (March 2021); and an AV-C Score of 85 in the Business Performance Test (June 2021), demonstrating minimum performance impact on protected endpoints. These results reflect our consistent commitment to participating in independent third-party testing, with CrowdStrike Falcon® Pro™ winning a fourth consecutive AV-Comparatives’ Approved Business Security Product award in July 2021. The Falcon platform also achieved 99.5% protection in the Real-World Protection Test.

In SE Labs testing, CrowdStrike has a track record of 10 AAA ratings in SE Labs Enterprise Endpoint protection reports, dating back to March 2018. Recently, Falcon received the highest AAA rating in the Q1 2021 Enterprise Endpoint Protection evaluation, with 100% legitimate accuracy rating and 100% protection against targeted attacks. Falcon was also named Best New Endpoint Solution in SE Labs’ annual report. Previous SE Labs Breach Response reports also awarded Falcon a AAA rating, highlighting its strength in stopping breaches.

Additional Resources

- Learn how the CrowdStrike Falcon® platform provides comprehensive protection across your organization, workers and data, wherever they are located.

- Learn more about CrowdStrike Falcon® Insight™ endpoint detection and response (EDR) by visiting this product webpage, reading this data sheet and watching this video demo.

- See how CrowdStrike Falcon® Complete™ stops Microsoft Exchange Server zero-day exploits.

- Watch CrowdStrike CEO, George Kurtz, introduce CROWDSTRIKE FALCON® XDR during his opening keynote session at Fal.Con 2021 — view the recording.

- Get a full-featured free trial of CrowdStrike Falcon® Prevent™ and see for yourself how true next-gen AV performs against today’s most sophisticated threats.
