Protecting cloud workloads from zero-day vulnerabilities like Log4Shell is a challenge that every organization faces.

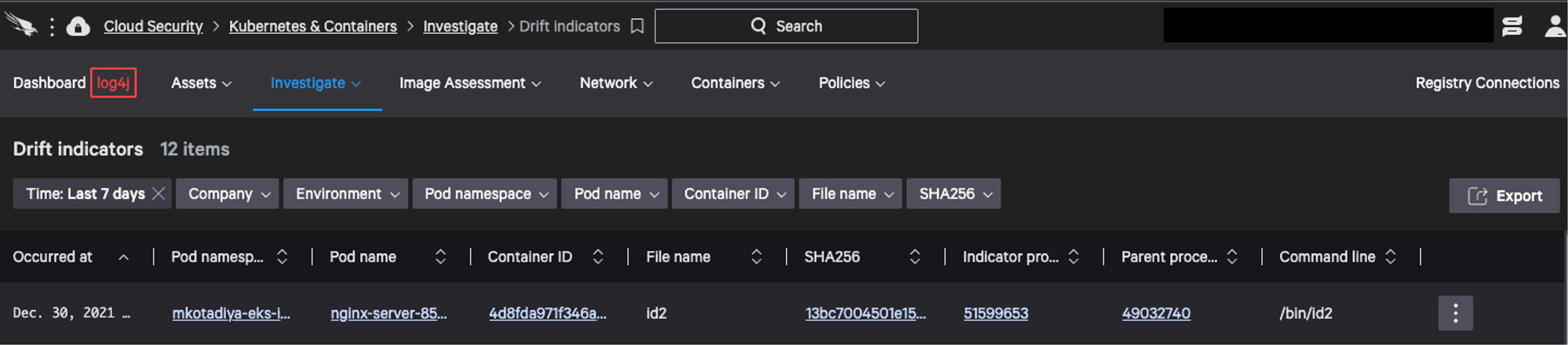

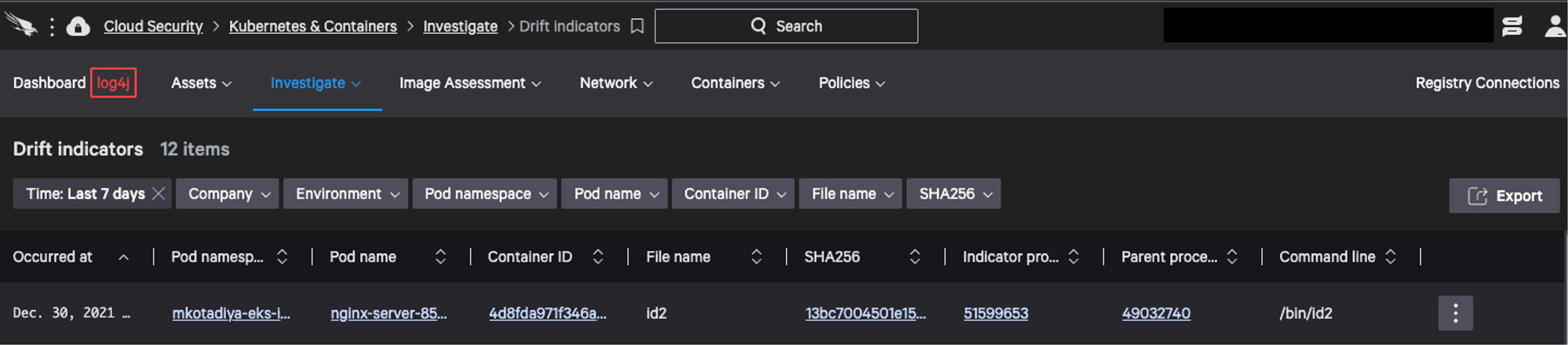

Figure 1. CrowdStrike Falcon® drift dashboard highlights containers that have deviated from expected behavior by adding new executables after launch

When a cloud vulnerability is published, organizations can try to identify impacted artifacts through software composition analysis, but even if they’re able to identify all impacted areas, the patching process can be cumbersome and time-consuming. As we saw with Log4Shell, this can become even more complicated when the vulnerability is nearly ubiquitous.

But patching doesn’t address the risk organizations face in the window between a zero-day’s discovery and its publication. According to MIT Technology Review, at least 66 zero-day vulnerabilities were discovered in 2021, the most on record in a single year.

Preventing zero-day exploitation from impacting an organization requires a proactive approach that automatically detects and protects customers against post-exploitation activity.

Why Use Anomaly Detection?

We understand our various adversaries and their core objectives well, which usually allows us to find them regardless of how they get into a system. For example, a cryptojacker will typically download and start up a coin miner. A ransomware-focused group will usually end up trying to encrypt a lot of files. And all groups, regardless of their objective, will probably conduct some reconnaissance.

As a result of moving our workloads to the cloud, though, our attack surface has become larger, more complex and faster-changing than ever. At the same time, the army of strongly motivated threat actors trying to find stealthy new ways to exploit this hard-to-defend surface grows every day. There will always be cases of clever actors behaving in novel ways and evading defenses set to look for specific, expected patterns. Log4Shell, for example, makes it easy for attackers to inject behavior into trusted applications, leveraging the power of the Java virtual machine (JVM) to achieve their objectives in new ways.

However, the shift to cloud technologies also gives us a new advantage. Cloud-based workloads tend to be small, single-purpose and immutable, and we have visibility into earlier pre-deployment stages of the development cycle that give us useful information about their components and intended configuration. Unlike the behavior of a general-purpose computer, workload behavior can be predicted within certain contexts. This means that, in addition to trying to predict which specific abnormal behaviors attackers might introduce to the system, we can define “normal behavior” and flag any significant deviation from it, including novel attacks on undisclosed vulnerabilities.

Profiling and anomaly detection are complicated techniques with checkered pasts. They are certainly not sufficient as a detection strategy on their own, and great care has to be taken to avoid a flood of false positives. We need to introduce them thoughtfully and correctly in a way that complements our existing attacker-focused detections and provides another layer of protection against zero-day and known threats without negating the benefits of tried and true approaches.

New Context Enables Anomaly Detections

To enable the first iteration of anomaly detections, we added context to the Falcon sensor, allowing us to segment its telemetry in ways that make it more predictable. If we know that certain events come from an Apache Tomcat instance hosting a particular service in a particular Kubernetes cluster, for example, we can make better judgments about whether they describe typical behavior than we can about events from an arbitrary Linux server we know nothing about. The new context includes information attached to process trees:

- The specific long-running application that spawned the tree (e.g., “Weblogic”)

- Whether the tree is the result of a Docker exec command

- Whether a tree appears to contain signs of hands-on-keyboard activity
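As a rough illustration of how this context could be used to segment telemetry, the sketch below attaches the three pieces of context to a process-tree record and groups events by them. The class and field names (`TreeContext`, `origin_app`, `from_docker_exec`, `hands_on_keyboard`) are hypothetical and chosen for this example only; they are not the Falcon sensor's actual schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TreeContext:
    """Hypothetical context attached to a process tree (illustrative only)."""
    origin_app: str          # long-running application that spawned the tree
    from_docker_exec: bool   # tree is the result of a Docker exec command
    hands_on_keyboard: bool  # tree shows signs of interactive operator activity


def segment_events(events):
    """Group (context, event_name) pairs by context so each segment can be profiled on its own."""
    segments = defaultdict(list)
    for context, event_name in events:
        segments[context].append(event_name)
    return dict(segments)


# Made-up events: two from the service itself, one from an interactive exec session.
events = [
    (TreeContext("tomcat", False, False), "accept_connection"),
    (TreeContext("tomcat", False, False), "read_config"),
    (TreeContext("tomcat", True, True), "spawn_shell"),
]
segments = segment_events(events)
```

Because the context is a frozen dataclass, it is hashable and can serve directly as the segment key, which keeps the routine service traffic apart from the interactive session.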

Initial Anomaly Detections Using New Context

By analyzing data from millions of sensors, we can identify invariants and encode them as high-confidence detections. We know, for example, that redis does not typically spawn an interactive shell anywhere in its process tree. It also does not modify crontab. Both actions are extremely security-relevant, so it makes sense to use these insights to alert when redis does either.
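A minimal sketch of how the two redis invariants could be encoded as a check over a process tree follows. The `Process` structure, the `interactive` flag, the shell list, and the idea of spotting crontab changes by process name are all assumptions made for this example; a real sensor would rely on much richer telemetry.

```python
from dataclasses import dataclass, field

# Assumed set of shell binaries, for illustration only.
SHELLS = {"sh", "bash", "dash", "zsh"}


@dataclass
class Process:
    name: str
    interactive: bool = False  # assumed flag, e.g. the process has a TTY attached
    children: list = field(default_factory=list)


def descendants(proc):
    """Yield every process below `proc` in the tree, depth first."""
    for child in proc.children:
        yield child
        yield from descendants(child)


def redis_invariant_violations(root):
    """Return violations of the two invariants for a tree rooted at redis-server."""
    if root.name != "redis-server":
        return []
    violations = []
    for proc in descendants(root):
        if proc.name in SHELLS and proc.interactive:
            violations.append(f"interactive shell: {proc.name}")
        if proc.name == "crontab":  # simplification: crontab can also be changed by file writes
            violations.append("crontab modification")
    return violations


tree = Process("redis-server", children=[
    Process("sh", children=[Process("bash", interactive=True)]),
])
violations = redis_invariant_violations(tree)
```

The check walks the whole tree rather than only direct children, matching the "anywhere in its process tree" wording above.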

Newly added telemetry makes it easier to capture what is and isn’t normal for a particular containerized workload. Behaviors that can now be flagged include:

- Direct connection to kubelet made (potential lateral movement attempt)

- External process memory accessed (potential credential theft)

- New executables added to a running container

- Interactive sessions started in a container

- Horizontal port scan

- Normal port scan

- And more
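To make one item from this list concrete, here is a hedged sketch of detecting new executables added to a running container. It assumes we have a list of executables present in the image at launch and a stream of observed execution paths; the function name and the sample paths are invented for illustration.

```python
def find_drift(image_executables, observed_executions):
    """Return executables that ran in a container but were not in its image at launch.

    Results keep first-seen order and are de-duplicated.
    """
    baseline = set(image_executables)
    drifted = []
    for path in observed_executions:
        if path not in baseline and path not in drifted:
            drifted.append(path)
    return drifted


# Made-up data: the image ships two binaries, then an unknown one starts running.
image_executables = ["/usr/bin/java", "/bin/sh"]
observed = ["/usr/bin/java", "/tmp/miner", "/usr/bin/java", "/tmp/miner"]
drift = find_drift(image_executables, observed)
```

This works only because container images are expected to be immutable; on a general-purpose host, where new binaries appear routinely, the same check would be far noisier.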

Gain Understanding with More Sophisticated Anomaly Detections

Several key pieces will make more sophisticated anomaly detections possible: detections capable of automatically identifying subtle changes in specific services within specific environments. These pieces include:

- More powerful user policies

- An understanding of higher-level cloud workload constructs like “service”

- Telemetry aggregation across these constructs and over time

- More information from build and deploy phases

make any connections to the external internet. Clusters designated “production” do not normally have users exec’ing into containers and installing packages (and so on). As an example, in Falcon sensor 6.35 we can examine basic process profiling of individual containers and enhanced drift detection, as seen in Figure 1. With rich enough telemetry and context, we can also begin to bring machine learning to bear on the problem, although that’s a topic for a different post.
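The kind of baseline described here, such as a workload that never connects to the external internet, could be sketched as a simple per-service profile built from telemetry aggregated over time. The `ServiceProfile` class, the behavior labels, and the `min_observations` threshold are hypothetical; this is a toy model under those assumptions, not how Falcon implements profiling.

```python
from collections import Counter


class ServiceProfile:
    """Toy per-service baseline: counts behaviors seen during a learning period."""

    def __init__(self, min_observations=100):
        self.min_observations = min_observations
        self.counts = Counter()
        self.total = 0

    def learn(self, behavior):
        """Record one observed behavior for this service."""
        self.counts[behavior] += 1
        self.total += 1

    def is_anomalous(self, behavior):
        """Flag a never-before-seen behavior, but only once the baseline is mature."""
        if self.total < self.min_observations:
            return False  # too little telemetry; stay quiet to limit false positives
        return self.counts[behavior] == 0


profile = ServiceProfile(min_observations=3)
for behavior in ["listen:8080", "connect:internal-db", "listen:8080"]:
    profile.learn(behavior)

external_flagged = profile.is_anomalous("connect:external")
known_flagged = profile.is_anomalous("listen:8080")
```

Refusing to alert until enough observations have accumulated is one simple way to address the false-positive concern raised earlier; production systems would need to handle legitimate change, such as new deployments, far more carefully.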

Block Zero-days Before They’re Exploited

Reliable detection of deviations from expected behavior can be very powerful for stopping breaches. A Java workload running in a container with a vulnerable version of Log4j will begin behaving differently once compromised. It might make new outgoing network connections, add or run new executable files, or edit critical system files it doesn’t normally touch. All of these things would set off alerts even before Log4Shell was in the headlines.

Within cloud workload protection, we remain attacker-focused and will always research and detect the specific tactics of significant threat actors. However, by taking advantage of the opportunities the new, cloud-centric technology stack provides, we’re beginning to build another layer of protection through anomaly detection. Both layers will block the next zero-day before anyone even knows it exists.

Additional Resources

- Learn more about stopping cloud breaches in this blog: Why You Need an Adversary-focused Approach to Stop Cloud Breaches

- For tips on how to address IT security challenges, read this blog: 5 Common Hybrid IT Security Challenges and How to Overcome Them.

- Learn how you can stop cloud breaches with CrowdStrike unified cloud security posture management and breach prevention for multi-cloud and hybrid environments — all in one lightweight platform.

- Learn more about how Falcon Cloud Security enables organizations to build, run and secure cloud-native applications with speed and confidence.

- See if a managed solution is right for you. Find out about Falcon Cloud Workload Protection Complete: Managed Detection and Response for Cloud Workloads.
